Migrate databases to Kubernetes using Konveyor

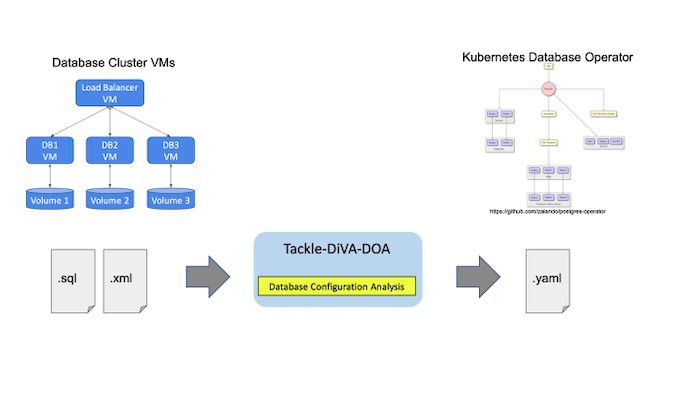

Kubernetes database operators are useful for building scalable database servers as database (DB) clusters. But because you have to create new artifacts expressed as YAML files, migrating existing databases to Kubernetes requires a lot of manual effort. This article introduces a new open source tool named Konveyor Tackle-DiVA-DOA (Data-intensive Validity Analyzer-Database Operator Adaptation). It automatically generates deployment-ready artifacts for database operator migration, and it does so through datacentric code analysis.

What is Tackle-DiVA-DOA?

Tackle-DiVA-DOA (DOA, for short) is an open source datacentric database configuration analytics tool in Konveyor Tackle. It imports target database configuration files (such as SQL and XML) and generates a set of Kubernetes artifacts for database migration to operators such as Zalando Postgres Operator.

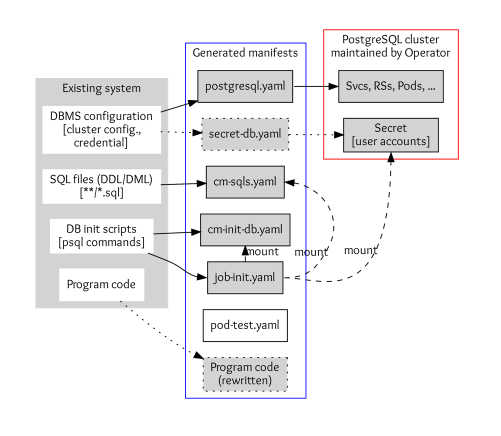

DOA finds and analyzes the settings of an existing system that uses a database management system (DBMS). Then it generates manifests (YAML files) of Kubernetes and the Postgres operator for deploying an equivalent DB cluster.

Database settings of an application consist of DBMS configurations, SQL files, DB initialization scripts, and program code that accesses the DB.

- DBMS configurations include DBMS parameters, cluster configuration, and credentials. DOA stores the configuration in postgres.yaml and, if you need custom credentials, stores the secrets in secret-db.yaml.

- SQL files are used to define and initialize tables, views, and other entities in the database. These are stored in the Kubernetes ConfigMap definition cm-sqls.yaml.

- Database initialization scripts typically create databases and schemas and grant users access to the DB entities so that the SQL files work correctly. DOA tries to find initialization requirements in scripts and documents, or guesses them if it can't. The result is also stored in a ConfigMap, named cm-init-db.yaml.

- Database access settings, such as the host and database name, are in some cases embedded in program code. DOA rewrites these to work with the migrated DB cluster.
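As an illustration of the ConfigMap step, the Python sketch below packs a set of SQL files into a ConfigMap manifest similar in shape to cm-sqls.yaml. It is not DOA's implementation: the helper name build_sql_configmap, the sample file name, and the choice of JSON output (kubectl accepts JSON manifests as well as YAML) are assumptions made for this example.

```python
import json
import re

# Kubernetes requires ConfigMap data keys to consist of alphanumerics, '-', '_' or '.'.
VALID_KEY = re.compile(r"^[-._a-zA-Z0-9]+$")

def build_sql_configmap(app_name, sql_files):
    """Pack {file name: SQL text} into a ConfigMap manifest (hypothetical helper)."""
    for key in sql_files:
        if not VALID_KEY.match(key):
            raise ValueError(f"invalid ConfigMap key: {key!r}")
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": f"{app_name}-cm-sqls"},
        # Sort keys so the generated manifest is stable between runs.
        "data": dict(sorted(sql_files.items())),
    }

if __name__ == "__main__":
    manifest = build_sql_configmap(
        "trading-app",
        {"schema.sql": "CREATE TABLE trader (id SERIAL PRIMARY KEY);"},
    )
    print(json.dumps(manifest, indent=2))
```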

Tutorial

DOA is expected to run within a container and comes with a script to build its image. Make sure Docker and Bash are installed in your environment, and then run the build script as follows:

$ cd /tmp

$ git clone https://github.com/konveyor/tackle-diva.git

$ cd tackle-diva/doa

$ bash util/build.sh

…

docker image ls diva-doa

REPOSITORY TAG IMAGE ID CREATED SIZE

diva-doa 2.2.0 5f9dd8f9f0eb 14 hours ago 1.27GB

diva-doa latest 5f9dd8f9f0eb 14 hours ago 1.27GB

This builds DOA and packages it as container images. DOA is now ready to use.

The next step runs the bundled run-doa.sh wrapper script, which starts the DOA container. Specify the Git repository of the target database application. This example uses a Postgres database in the TradeApp application. Use the -o option to set the location of the output files and the -i option to give the name of the database initialization script:

$ cd /tmp/tackle-diva/doa

$ bash run-doa.sh -o /tmp/out -i start_up.sh \

https://github.com/saud-aslam/trading-app

[OK] successfully completed.

DOA creates the /tmp/out/ directory and, inside it, /tmp/out/trading-app, a directory named after the target application. In this example, the application name is trading-app, which is the GitHub repository name. The generated artifacts (the YAML files) are placed under this application-name directory:

$ ls -FR /tmp/out/trading-app/

/tmp/out/trading-app/:

cm-init-db.yaml cm-sqls.yaml create.sh* delete.sh* job-init.yaml postgres.yaml test/

/tmp/out/trading-app/test:

pod-test.yaml
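DOA names the output directory after the repository. A minimal Python sketch of deriving such a name from a Git URL; the helper app_name_from_repo and its handling of a trailing slash or .git suffix are assumptions, not taken from DOA's source:

```python
from urllib.parse import urlparse

def app_name_from_repo(url):
    """Return the last path segment of a Git URL, without a trailing .git."""
    path = urlparse(url).path.rstrip("/")
    name = path.rsplit("/", 1)[-1]
    if name.endswith(".git"):
        name = name[: -len(".git")]
    return name

print(app_name_from_repo("https://github.com/saud-aslam/trading-app"))  # trading-app
```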

The prefix of each YAML file denotes the kind of resource that the file defines. For instance, each cm-*.yaml file defines a ConfigMap, and job-init.yaml defines a Job resource. At this point, secret-db.yaml is not created, and DOA uses credentials that the Postgres operator automatically generates.
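Because the generated files follow this naming convention, a script can tell what each file defines without parsing it. The Python sketch below maps file-name prefixes to resource kinds; the table only covers the files seen in this example and is an illustrative assumption, not part of DOA:

```python
# Prefix-to-kind table inferred from the files generated in this example.
PREFIX_TO_KIND = {
    "cm-": "ConfigMap",
    "job-": "Job",
    "secret-": "Secret",
    "pod-": "Pod",
    "postgres": "postgresql",  # custom resource handled by the Postgres operator
}

def kind_for(filename):
    """Guess the Kubernetes resource kind from a generated file name."""
    for prefix, kind in PREFIX_TO_KIND.items():
        if filename.startswith(prefix):
            return kind
    return None  # not a manifest, e.g. create.sh

for f in ["cm-sqls.yaml", "job-init.yaml", "postgres.yaml", "create.sh"]:
    print(f, "->", kind_for(f))
```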

Now you have the resource definitions required to deploy a PostgreSQL cluster on a Kubernetes instance. You can deploy them using the utility script create.sh. Alternatively, you can use the kubectl create command:

$ cd /tmp/out/trading-app

$ bash create.sh # or simply "kubectl apply -f ."

configmap/trading-app-cm-init-db created

configmap/trading-app-cm-sqls created

job.batch/trading-app-init created

postgresql.acid.zalan.do/diva-trading-app-db created

The Kubernetes resources are created, including postgresql (a database cluster resource managed by the Postgres operator), service, rs, pod, job, cm, secret, pv, and pvc. For example, you can see four database pods named trading-app-db-*, because the number of database instances is set to four in postgres.yaml.

$ kubectl get all,postgresql,cm,secret,pv,pvc

NAME READY STATUS RESTARTS AGE

…

pod/trading-app-db-0 1/1 Running 0 7m11s

pod/trading-app-db-1 1/1 Running 0 5m

pod/trading-app-db-2 1/1 Running 0 4m14s

pod/trading-app-db-3 1/1 Running 0 4m

NAME TEAM VERSION PODS VOLUME CPU-REQUEST MEMORY-REQUEST AGE STATUS

postgresql.acid.zalan.do/trading-app-db trading-app 13 4 1Gi 15m Running

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/trading-app-db ClusterIP 10.97.59.252 <none> 5432/TCP 15m

service/trading-app-db-repl ClusterIP 10.108.49.133 <none> 5432/TCP 15m

NAME COMPLETIONS DURATION AGE

job.batch/trading-app-init 1/1 2m39s 15m
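The instance count lives in the numberOfInstances field of the Zalando postgresql custom resource. The Python sketch below builds a minimal manifest with that field and lists the pod names to expect; the team ID, PostgreSQL version, and volume size are modeled on the output above rather than copied from the generated postgres.yaml. Depending on its configuration, the Zalando operator may require the cluster name to start with the team ID followed by a dash; the sketch enforces that convention, which the trading-app-db and trading-app pair above follows.

```python
import json

def postgres_manifest(name, team, instances, version="13", volume="1Gi"):
    """Minimal Zalando 'postgresql' resource; the values are illustrative."""
    if not name.startswith(team + "-"):
        raise ValueError("cluster name should start with '<teamId>-'")
    return {
        "apiVersion": "acid.zalan.do/v1",
        "kind": "postgresql",
        "metadata": {"name": name},
        "spec": {
            "teamId": team,
            "numberOfInstances": instances,
            "volume": {"size": volume},
            "postgresql": {"version": version},
        },
    }

def expected_pods(manifest):
    """The operator runs a StatefulSet, so pods are named <cluster>-0 .. <cluster>-(N-1)."""
    name = manifest["metadata"]["name"]
    return [f"{name}-{i}" for i in range(manifest["spec"]["numberOfInstances"])]

m = postgres_manifest("trading-app-db", "trading-app", 4)
print(json.dumps(m["spec"]))
print(expected_pods(m))
# ['trading-app-db-0', 'trading-app-db-1', 'trading-app-db-2', 'trading-app-db-3']
```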

Note that the Postgres operator comes with a user interface (UI), where you can find the created cluster. To open the UI in a browser, you need to expose its endpoint URL. If you use minikube, do the following:

$ minikube service postgres-operator-ui

A browser window then opens automatically and shows the UI.

(Yasuharu Katsuno and Shin Saito, CC BY-SA 4.0)

Now you can access the database instances from a test pod. DOA also generated a pod definition for testing.

$ kubectl apply -f /tmp/out/trading-app/test/pod-test.yaml # creates a test Pod

pod/trading-app-test created

$ kubectl exec trading-app-test -it -- bash # login to the pod

The database host name and the credentials for accessing the DB are injected into the pod, so you can use them to connect to the database. Run the psql meta-commands to list all tables and views in the database:

# printenv DB_HOST; printenv PGPASSWORD

(values of the variables are shown)

# psql -h ${DB_HOST} -U postgres -d jrvstrading -c '\dt'

List of relations

Schema | Name | Type | Owner

--------+----------------+-------+----------

public | account | table | postgres

public | quote | table | postgres

public | security_order | table | postgres

public | trader | table | postgres

(4 rows)

# psql -h ${DB_HOST} -U postgres -d jrvstrading -c '\dv'

List of relations

Schema | Name | Type | Owner

--------+-----------------------+------+----------

public | pg_stat_kcache | view | postgres

public | pg_stat_kcache_detail | view | postgres

public | pg_stat_statements | view | postgres

public | position | view | postgres

(4 rows)
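Application code running in the pod can use the same injected values. The Python sketch below builds a libpq-style keyword/value connection string from the environment; DB_HOST comes from the test pod, the database name jrvstrading and user postgres come from the psql commands above, and the helper name and sample host value are hypothetical. libpq reads PGPASSWORD from the environment by itself, so the password is left out of the string.

```python
import os

def pg_conninfo(env=None, dbname="jrvstrading", user="postgres", port=5432):
    """Build a libpq keyword/value connection string from injected variables."""
    env = os.environ if env is None else env
    host = env.get("DB_HOST")
    if not host:
        raise RuntimeError("DB_HOST is not set; is this running in the test pod?")
    # PGPASSWORD is deliberately omitted: libpq picks it up from the environment.
    return f"host={host} port={port} dbname={dbname} user={user}"

print(pg_conninfo({"DB_HOST": "trading-app-db", "PGPASSWORD": "example"}))
# host=trading-app-db port=5432 dbname=jrvstrading user=postgres
```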

After the test is done, log out from the pod and remove the test pod:

# exit

$ kubectl delete -f /tmp/out/trading-app/test/pod-test.yaml

Finally, delete the created cluster using a script:

$ bash delete.sh

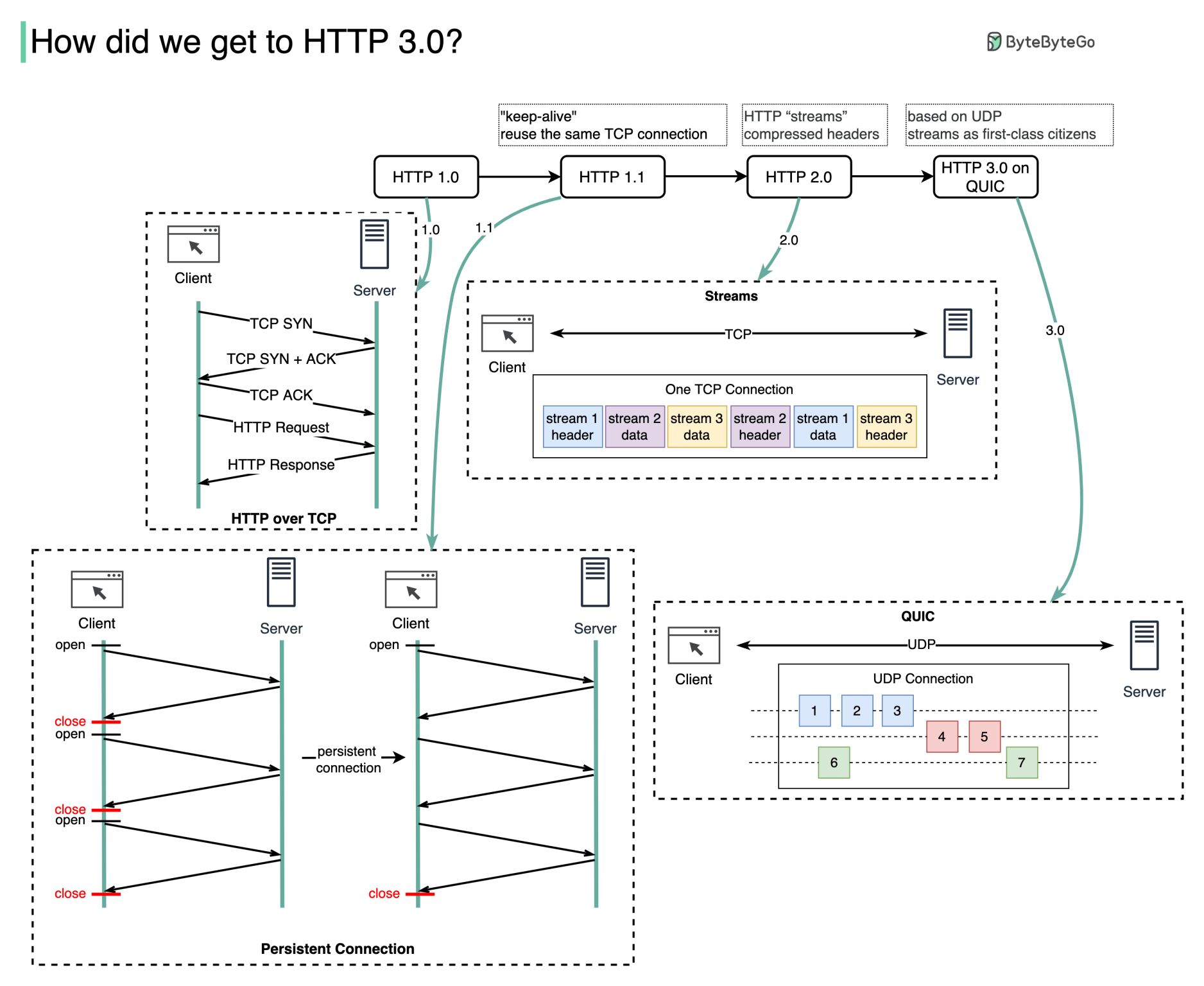

Why HTTP/3?

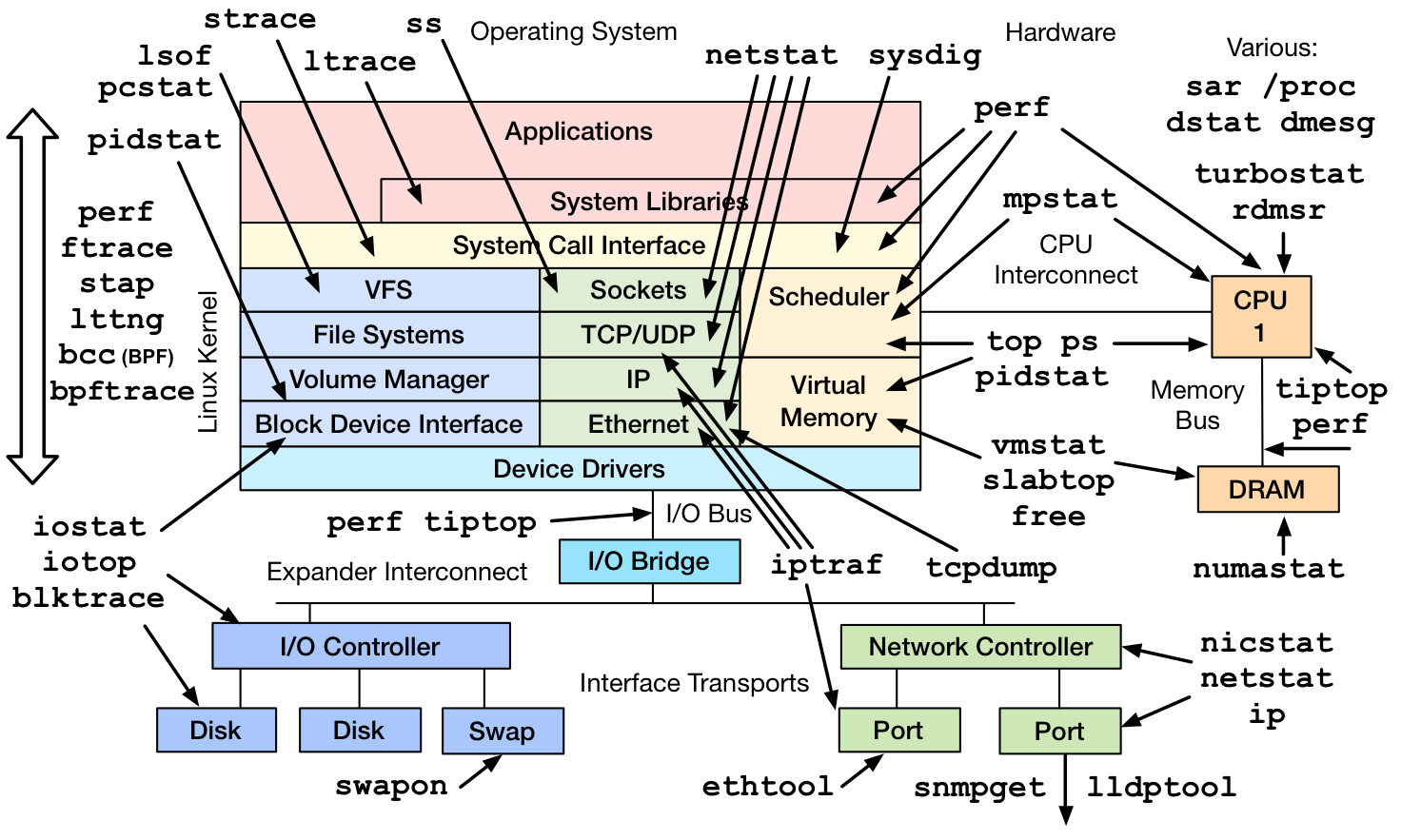

Selecting Performance Monitoring Tools

System monitoring is a helpful approach to provide the user with data regarding the actual timing behavior of the system. Users can perform further analysis using the data that these monitors provide. One of the goals of system monitoring is to determine whether the current execution meets the specified technical requirements.

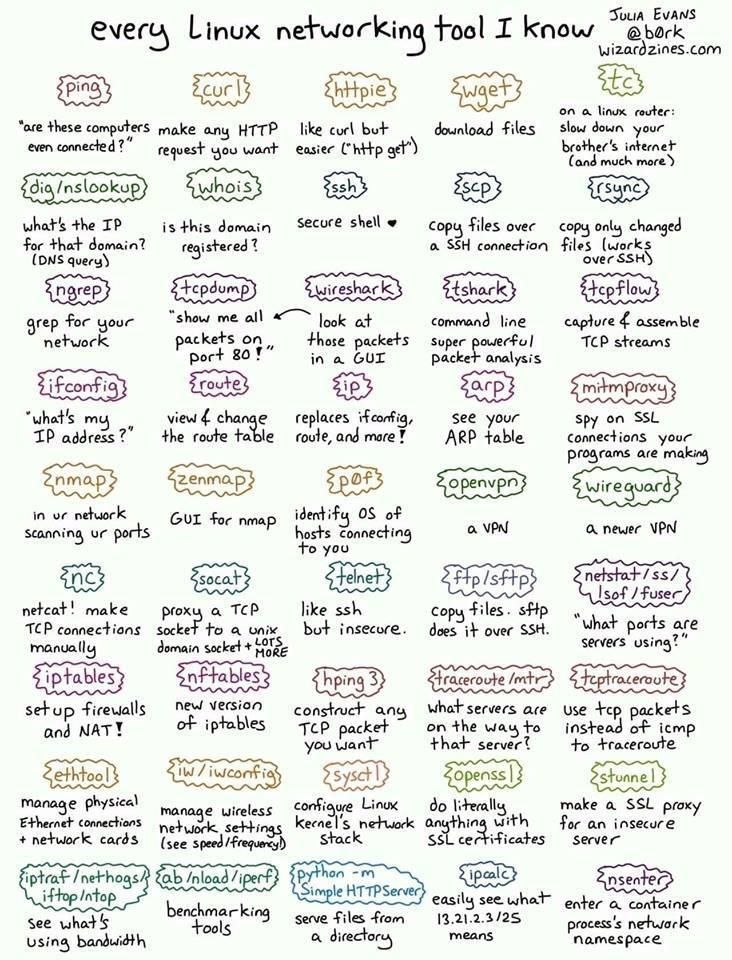

These monitoring tools retrieve commonly viewed information, and can be used by way of the command line or a graphical user interface, as determined by the system administrator. These tools display information about the Linux system, such as free disk space, the temperature of the CPU, and other essential components, as well as networking information, such as the system IP address and current rates of upload and download.

Monitoring Tools

The Linux kernel maintains counter structures for counting events; a counter is incremented each time its event occurs. For example, disk reads and writes and process system calls are events that increment counters, whose values are stored as unsigned integers. Monitoring tools read these counter values and provide either per-process statistics, maintained in process structures, or system-wide statistics from the kernel. Monitoring tools can typically be run by non-privileged users. The ps and top commands provide process statistics, including CPU and memory usage.
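Since these counters only grow, monitoring tools typically derive a rate by sampling a counter twice and dividing the difference by the elapsed time. A minimal Python sketch of that arithmetic; the wraparound handling for fixed-width unsigned counters is a general technique added here, not something the text describes, and the 64-bit default width is an assumption:

```python
def counter_rate(prev, curr, interval_s, bits=64):
    """Events per second between two samples of an unsigned counter."""
    if interval_s <= 0:
        raise ValueError("interval must be positive")
    # Modular subtraction yields the correct delta even if the counter wrapped.
    delta = (curr - prev) % (1 << bits)
    return delta / interval_s

# e.g. disk reads completed: 1200 at t=0 s, 1500 at t=2 s
print(counter_rate(1200, 1500, 2))  # 150.0
```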

Monitoring Processes Using the ps Command

Troubleshooting a system requires understanding how the kernel communicates with processes, and how processes communicate with each other. At process creation, the system assigns a state to the process.

Use the ps aux command to list all processes with extended, user-oriented details. The resulting list includes the terminal from which each process was started, as well as processes without a terminal. A ? in the TTY column indicates that the process did not start from a terminal.

[user@host]$ ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

user 1350 0.0 0.2 233916 4808 pts/0 Ss 10:00 0:00 -bash

root 1387 0.0 0.1 244904 2808 ? Ss 10:01 0:00 /usr/sbin/anacron -s

root 1410 0.0 0.0 0 0 ? I 10:08 0:00 [kworker/0:2...

root 1435 0.0 0.0 0 0 ? I 10:31 0:00 [kworker/1:1...

user 1436 0.0 0.2 266920 3816 pts/0 R+ 10:48 0:00 ps aux
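The TTY column also makes it easy to filter processes programmatically. The Python sketch below parses ps aux output (a hard-coded excerpt of the listing above) and selects processes without a terminal; on a live system you could pass it subprocess.run(["ps", "aux"], capture_output=True, text=True).stdout instead. The parsing approach is an illustration and assumes that only the last column, COMMAND, contains spaces:

```python
SAMPLE = """\
USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND
user 1350 0.0 0.2 233916 4808 pts/0 Ss 10:00 0:00 -bash
root 1387 0.0 0.1 244904 2808 ? Ss 10:01 0:00 /usr/sbin/anacron -s
user 1436 0.0 0.2 266920 3816 pts/0 R+ 10:48 0:00 ps aux
"""

def parse_ps_aux(text):
    """Turn ps aux output into a list of dicts keyed by column name."""
    lines = text.strip().splitlines()
    header = lines[0].split()
    rows = []
    for line in lines[1:]:
        # COMMAND (the last column) may contain spaces, so split at most len-1 times.
        fields = line.split(None, len(header) - 1)
        rows.append(dict(zip(header, fields)))
    return rows

no_tty = [p["PID"] for p in parse_ps_aux(SAMPLE) if p["TTY"] == "?"]
print(no_tty)  # ['1387']
```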

The Linux version of ps supports three option formats:

- UNIX (POSIX) options, which may be grouped and must be preceded by a dash.

- BSD options, which may be grouped and must not include a dash.

- GNU long options, which are preceded by two dashes.
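The three styles can be told apart by their leading characters. This Python sketch classifies a single ps argument according to the rules above; it is a simplification that ignores option arguments, such as the column list that follows -o:

```python
def ps_option_style(arg):
    """Classify a ps argument as GNU, UNIX, or BSD style."""
    if arg.startswith("--"):
        return "GNU"   # long options: two dashes
    if arg.startswith("-"):
        return "UNIX"  # POSIX options: one dash, may be grouped
    return "BSD"       # BSD options: no dash, may be grouped

for arg in ["aux", "-ef", "--sort"]:
    print(arg, ps_option_style(arg))
```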

The output below uses the UNIX options to list every process with full details:

[user@host]$ ps -ef

UID PID PPID C STIME TTY TIME CMD

root 2 0 0 09:57 ? 00:00:00 [kthreadd]

root 3 2 0 09:57 ? 00:00:00 [rcu_gp]

root 4 2 0 09:57 ? 00:00:00 [rcu_par_gp]

...output omitted...

Key Columns in ps Output

PID

This column shows the unique process ID.

TIME

This column shows the total CPU time consumed by the process since it started, in hours:minutes:seconds format.

%CPU

This column shows the CPU utilization of the process: the CPU time it has used divided by the time it has been running, expressed as a percentage.

RSS

This column shows the resident set size (RSS): the non-swapped physical memory that a process uses, in kilobytes.

%MEM

This column shows the ratio of the process's resident set size to the physical memory on the machine, expressed as a percentage.
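Since %MEM is RSS divided by physical memory, it is easy to recompute. The Python sketch below does that for the -bash process from the ps aux listing (RSS 4808 kB), assuming a machine with 1829 MiB of RAM; on Linux the total comes from the MemTotal line of /proc/meminfo. ps prints the value with only one decimal place, so expect it to differ slightly from the full-precision result:

```python
def mem_total_kb(meminfo_text):
    """Return MemTotal in kB from the text of /proc/meminfo."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            return int(line.split()[1])
    raise ValueError("MemTotal not found")

def mem_percent(rss_kb, total_kb):
    """%MEM as described above: resident set size over physical memory, in percent."""
    return 100.0 * rss_kb / total_kb

# On Linux: total = mem_total_kb(open("/proc/meminfo").read())
total = mem_total_kb("MemTotal:        1872896 kB\n")  # 1829 MiB, illustrative
print(round(mem_percent(4808, total), 2))  # 0.26
```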

Use the -p option together with the pidof command to list the sshd processes that are running.

[user@host ~]$ ps -p $(pidof sshd)

PID TTY STAT TIME COMMAND

756 ? Ss 0:00 /usr/sbin/sshd -D ...

1335 ? Ss 0:00 sshd: user [priv]

1349 ? S 0:00 sshd: user@pts/0

Use the following command to list all processes sorted by memory usage in descending order:

[user@host ~]$ ps ax --format pid,%mem,cmd --sort -%mem

PID %MEM CMD

713 1.8 /usr/libexec/sssd/sssd_nss --uid 0 --gid 0 --logger=files

715 1.8 /usr/libexec/platform-python -s /usr/sbin/firewalld --nofork --nopid

753 1.5 /usr/libexec/platform-python -Es /usr/sbin/tuned -l -P

687 1.2 /usr/lib/polkit-1/polkitd --no-debug

731 0.9 /usr/sbin/NetworkManager --no-daemon

...output omitted...

Various other options are available for ps, including the o (or -o) option to customize the columns shown in the output.

Monitoring Process Using top

The top command provides a real-time report of process activities, with an interface that lets the user filter and manipulate the monitored data. The output shows a system-wide summary at the top and a process list at the bottom, sorted by CPU usage (highest first) by default. The -n 1 option makes top exit after a single display of the process list. The following is an example output of the command:

[user@host ~]$ top -n 1

Tasks: 115 total, 1 running, 114 sleeping, 0 stopped, 0 zombie

%Cpu(s): 0.0 us, 3.2 sy, 0.0 ni, 96.8 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

MiB Mem : 1829.0 total, 1426.5 free, 173.6 used, 228.9 buff/cache

MiB Swap: 0.0 total, 0.0 free, 0.0 used. 1495.8 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

1 root 20 0 243968 13276 8908 S 0.0 0.7 0:01.86 systemd

2 root 20 0 0 0 0 S 0.0 0.0 0:00.00 kthreadd

3 root 0 -20 0 0 0 I 0.0 0.0 0:00.00 rcu_gp

...output omitted...
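The %Cpu(s) line splits CPU time into user (us), system (sy), nice (ni), idle (id), I/O wait (wa), hardware and software interrupt (hi, si), and steal (st) percentages. The Python sketch below parses that line from the output above and derives overall utilization as 100 minus idle; the helper is illustrative and not part of top:

```python
def parse_cpu_line(line):
    """Parse top's '%Cpu(s): 0.0 us, 3.2 sy, ...' line into {field: percent}."""
    _, values = line.split(":", 1)
    result = {}
    for item in values.split(","):
        number, name = item.split()
        result[name] = float(number)
    return result

line = "%Cpu(s): 0.0 us, 3.2 sy, 0.0 ni, 96.8 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st"
cpu = parse_cpu_line(line)
print(round(100 - cpu["id"], 1))  # 3.2
```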

Useful Key Combinations to Sort Fields

RES

Use Shift+M to sort the processes based on resident memory.

PID

Use Shift+N to sort the processes based on process ID.

TIME+

Use Shift+T to sort the processes based on CPU time.

Press F and select a field from the list to use any other field for sorting.

IMPORTANT

The top command can impose significant overhead on the system because of the many system calls it makes. While top is running, the top process itself is often the top CPU-consuming process.

Monitoring Memory Usage

The free command lists both free and used physical memory and swap memory. The -b, -k, -m, and -g options show the output in bytes, KB, MB, or GB, respectively. The -s option takes an argument that specifies the number of seconds between refreshes. For example, free -s 1 produces an update every second.

[user@host ~]$ free -m

total used free shared buff/cache available

Mem: 1829 172 1427 16 228 1496

Swap: 0 0 0

Near-zero values in the buff/cache and available columns indicate a low-memory situation. If the available memory is more than 20% of the total while the used memory is close to the total memory, these values still indicate a healthy system.
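The rule of thumb above can be checked automatically. free takes its figures from /proc/meminfo, so the Python sketch below parses MemTotal and MemAvailable (in kB) from that file's text and applies the 20% threshold; the sample values are illustrative, chosen to match the free -m output above:

```python
def parse_meminfo(text):
    """Parse /proc/meminfo text into {field: value in kB}."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            info[key] = int(parts[0])
    return info

def memory_is_healthy(info, threshold=0.20):
    """Apply the rule of thumb: available memory above 20% of total."""
    return info["MemAvailable"] / info["MemTotal"] > threshold

# On Linux: info = parse_meminfo(open("/proc/meminfo").read())
SAMPLE = "MemTotal:  1872896 kB\nMemFree:  1461248 kB\nMemAvailable:  1531904 kB\n"
info = parse_meminfo(SAMPLE)
print(round(100 * info["MemAvailable"] / info["MemTotal"]), memory_is_healthy(info))  # 82 True
```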

Monitoring File System Usage

One stable identifier that is associated with a file system is its UUID, a very long hexadecimal number that acts as a universally unique identifier. This UUID is part of the file system and remains the same as long as the file system is not recreated. The lsblk -fp command lists the full path of the device, along with the UUIDs and mount points, as well as the type of file system in the partition. If the file system is not mounted, the mount point displays as blank.

[user@host ~]$ lsblk -fp

NAME FSTYPE LABEL UUID MOUNTPOINT

/dev/vda

├─/dev/vda1 xfs 23ea8803-a396-494a-8e95-1538a53b821c /boot

├─/dev/vda2 swap cdf61ded-534c-4bd6-b458-cab18b1a72ea [SWAP]

└─/dev/vda3 xfs 44330f15-2f9d-4745-ae2e-20844f22762d /

/dev/vdb

└─/dev/vdb1 xfs 46f543fd-78c9-4526-a857-244811be2d88

The findmnt command gives a quick view of what is mounted where, and with which options. Running findmnt without any options lists all mounted file systems in a tree layout. Use the -s option to read the file systems from the /etc/fstab file. Use the -S option to search the file systems by source device.

[user@host ~]$ findmnt -S /dev/vda1

TARGET SOURCE FSTYPE OPTIONS

/ /dev/vda1 xfs rw,relatime,seclabel,attr2,inode64,noquota

The df command provides information about the total usage of the file systems. The -h option transforms the output into a human-readable form.

[user@host ~]$ df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 892M 0 892M 0% /dev

tmpfs 915M 0 915M 0% /dev/shm

tmpfs 915M 17M 899M 2% /run

tmpfs 915M 0 915M 0% /sys/fs/cgroup

/dev/vda1 10G 1.5G 8.6G 15% /

tmpfs 183M 0 183M 0% /run/user/1000
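df -h scales sizes by powers of 1024 and appends a unit suffix. Python's standard library reports the same kind of totals through shutil.disk_usage(), whose free field corresponds roughly to df's Avail column. The formatting helper below is a sketch that approximates df's style; its rounding may not match df exactly:

```python
import shutil

def human(n_bytes):
    """Format a byte count like df -h: powers of 1024 with a K/M/G/T/P suffix."""
    units = ["B", "K", "M", "G", "T", "P"]
    value = float(n_bytes)
    i = 0
    while value >= 1024 and i < len(units) - 1:
        value /= 1024
        i += 1
    # One decimal for small values (e.g. 1.5G), whole numbers otherwise (e.g. 892M).
    text = f"{value:.1f}" if value < 10 else f"{value:.0f}"
    return text + units[i]

usage = shutil.disk_usage("/")  # named tuple of bytes: total, used, free
print("Size", human(usage.total), "Used", human(usage.used), "Avail", human(usage.free))
```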

The du command displays the disk space used by all the files in a given directory and its subdirectories. The -s option suppresses the detailed per-directory output and displays only the total. As with the df command, the -h option displays the output in human-readable form.

[user@host ~]$ du -sh /home/user

16K /home/user
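du measures the space files actually occupy on disk rather than their length in bytes. A Python sketch of the same idea, assuming Linux, where st_blocks is counted in 512-byte units; like du, it counts hard-linked files once and does not follow symbolic links. The helper name du_bytes is hypothetical:

```python
import os

def du_bytes(path):
    """Approximate `du -s`: sum the allocated blocks of everything under path."""
    seen = set()
    total = 0
    for root, dirs, files in os.walk(path):  # followlinks=False by default
        entries = [root] + [os.path.join(root, n) for n in dirs + files]
        for entry in entries:
            st = os.lstat(entry)  # lstat: a symlink counts as itself, not its target
            key = (st.st_dev, st.st_ino)
            if key in seen:  # hard links and revisited directories count once
                continue
            seen.add(key)
            total += st.st_blocks * 512
    return total

print(du_bytes("."))
```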

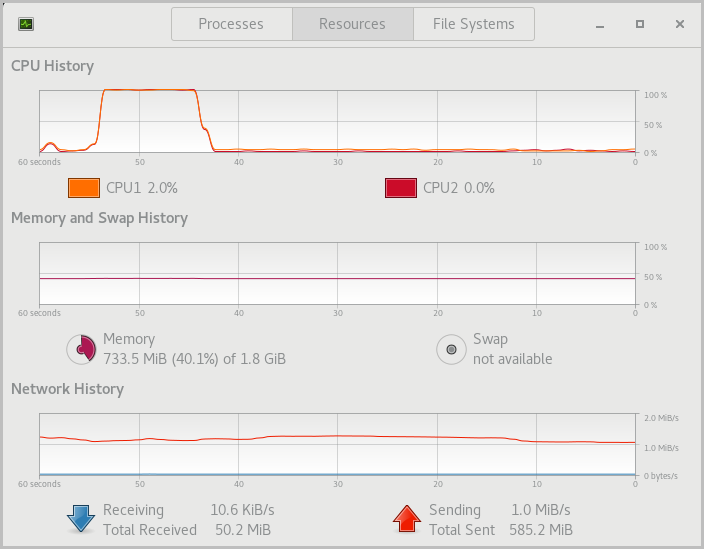

Using GNOME System Monitor

The System Monitor available on the GNOME desktop provides statistical data about the system status, load, and processes, as well as the ability to manipulate those processes. Like other monitoring tools, such as the top, ps, and free commands, System Monitor provides both system-wide and per-process data. To open the application from a terminal, run the gnome-system-monitor command.

To view the CPU usage, go to the Resources tab and look at the CPU History chart.


Figure 2.2: CPU usage history in System Monitor

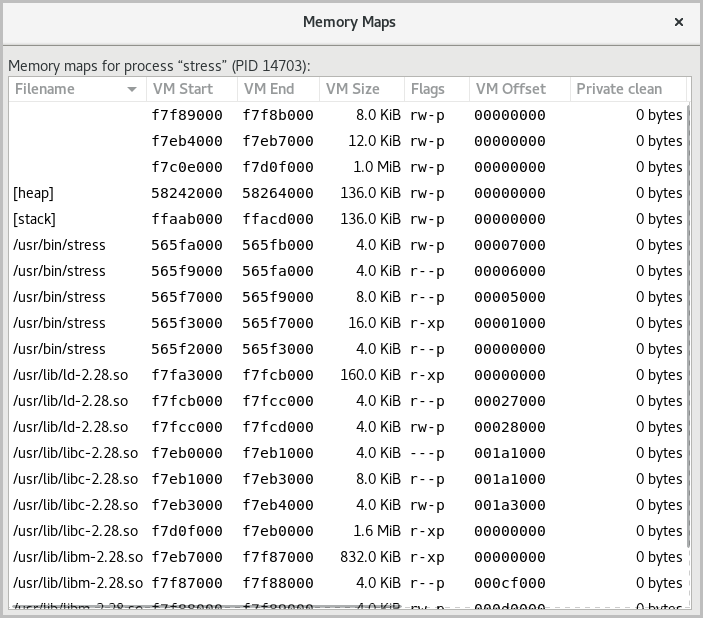

The virtual memory is the sum of the physical memory and the swap space in a system. A running process maps the location in physical memory to files on disk. The memory map displays the total virtual memory consumed by a running process, which determines the memory cost of running that process instance. The memory map also displays the shared libraries used by the process.


Figure 2.3: Memory map of a process in System Monitor

To display the memory map of a process in System Monitor, locate the process in the Processes tab, right-click it, and select Memory Maps.
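On Linux, the kernel exposes a process's memory mappings in /proc/<pid>/maps. The Python sketch below lists the shared libraries found in the text of that file; the sample lines, addresses, and library paths are made up for illustration:

```python
import os

def shared_libraries(maps_text):
    """Return the distinct shared-object paths in /proc/<pid>/maps text."""
    libs = []
    for line in maps_text.splitlines():
        # Fields: address perms offset dev inode [pathname]
        parts = line.split(None, 5)
        if len(parts) == 6:
            path = parts[5]
            if ".so" in os.path.basename(path) and path not in libs:
                libs.append(path)
    return libs

# On Linux: shared_libraries(open(f"/proc/{os.getpid()}/maps").read())
SAMPLE = """\
55d0c0a00000-55d0c0a2a000 r--p 00000000 fd:00 101 /usr/bin/bash
7f3a1c000000-7f3a1c1c5000 r-xp 00000000 fd:00 202 /usr/lib64/libc-2.28.so
7f3a1c3c5000-7f3a1c3c9000 r--p 001c5000 fd:00 202 /usr/lib64/libc-2.28.so
7ffd5e1f0000-7ffd5e211000 rw-p 00000000 00:00 0 [stack]
"""
print(shared_libraries(SAMPLE))  # ['/usr/lib64/libc-2.28.so']
```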

6 Important things you need to run Kubernetes in production

Kubernetes adoption is at an all-time high. Almost every major IT organization invests in a container strategy, and Kubernetes is by far the most-used and most popular container orchestration technology. While there are many flavors of Kubernetes, managed solutions like AKS, EKS, and GKE are by far the most popular. Kubernetes is a very complex platform, but setting up a Kubernetes cluster is fairly easy as long as you choose a managed cloud solution. I would never advise self-managing a Kubernetes cluster unless you have a very good reason to do so.

Running Kubernetes comes with many benefits, but setting up a solid platform yourself without strong Kubernetes knowledge takes time. Setting up a Kubernetes stack according to best practices requires expertise, and that expertise is necessary to build a stable, future-proof cluster. Simply running a managed cluster and deploying your application is not enough; a production-ready Kubernetes cluster needs a few additional things. A good Kubernetes setup makes developers' lives a lot easier and gives them time to focus on delivering business value. In this article, I will share the most important things you need to run a Kubernetes stack in production.

1 – Infrastructure as Code (IaC)

First of all, managing your cloud infrastructure using desired state configuration (Infrastructure as Code, or IaC) comes with a lot of benefits and is a general cloud infrastructure best practice. Specifying infrastructure declaratively as code lets you test infrastructure changes in non-production environments. It discourages or prevents manual deployments, making your infrastructure deployments more consistent, reliable, and repeatable. Teams implementing IaC deliver more stable environments rapidly and at scale. IaC tools like Terraform or Pulumi work great for deploying your entire Kubernetes cluster in your cloud of choice, together with networking, load balancers, DNS configuration, and, of course, an integrated container registry.

2 – Monitoring & Centralized logging

Kubernetes is a very stable platform. Its self-healing capabilities solve many issues, and unless you know where to look, you might not even notice them. However, that does not mean monitoring is unimportant. I have seen teams running production without proper monitoring when suddenly a certificate expired, or a node memory overcommit caused an outage. You can easily prevent these failures with proper monitoring in place. Prometheus and Grafana are the most widely used monitoring solutions for Kubernetes and can monitor both your platform and your applications. Alerting (for example, with Alertmanager) should be set up for critical issues in your Kubernetes cluster, so that you can prevent downtime, failures, or even data loss.

Apart from metrics-based monitoring, it is also important to run centralized components like Fluentd or Filebeat to collect logs and ship them to a centralized logging platform like Elasticsearch, so that application error logs and log events can be traced in one place. These tools can be set up centrally, so standard monitoring is automatically in place for all apps without developer effort.
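As an example of a check that would catch the expired-certificate problem mentioned above, the Python sketch below computes the days left before a certificate's notAfter date and flags it under a threshold. In a cluster this would normally be a Prometheus alert rule rather than a script; the 14-day threshold and the function names are assumptions:

```python
import ssl
import time

def days_until_expiry(not_after, now=None):
    """Days left before a certificate's notAfter date (string format used by the ssl module)."""
    expires = ssl.cert_time_to_seconds(not_after)  # interpreted as UTC
    now = time.time() if now is None else now
    return (expires - now) / 86400

def needs_alert(not_after, threshold_days=14, now=None):
    """True when the certificate expires within the threshold."""
    return days_until_expiry(not_after, now) < threshold_days

not_after = "Jan  5 09:34:43 2018 GMT"
ten_days_before = ssl.cert_time_to_seconds(not_after) - 10 * 86400
print(needs_alert(not_after, now=ten_days_before))  # True
```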

3 – Centralized Ingress Controller with SSL certificate management

Kubernetes has the concept of an Ingress: a simple configuration that describes how traffic should flow from outside Kubernetes to your application. A central Ingress Controller (for example, NGINX) can be installed in the cluster to manage incoming traffic for every application. When the Ingress Controller is linked to a public cloud load balancer, all traffic is automatically load-balanced across nodes and sent to the right pod IP addresses.

Because it is centralized, an Ingress Controller brings many benefits. It can also take care of HTTPS and SSL/TLS. A complementary component called cert-manager is deployed centrally in Kubernetes and takes care of HTTPS certificates. It can be configured to use Let's Encrypt, wildcard certificates, or even a private certificate authority for internal, company-trusted certificates. Incoming HTTPS traffic is then terminated at the Ingress Controller with these certificates and forwarded to the correct Kubernetes pods. That is one more thing developers don't need to worry about.
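To make this concrete, the Python sketch below builds an Ingress manifest (networking.k8s.io/v1) that routes a host name to a Service through the NGINX Ingress Controller and requests a certificate from cert-manager with the cert-manager.io/cluster-issuer annotation. The host, Service name, port, and issuer name (letsencrypt-prod) are hypothetical; the issuer must already exist in your cluster:

```python
import json

def ingress_manifest(app, host, service, port, issuer="letsencrypt-prod"):
    """Ingress handled by an NGINX Ingress Controller, with TLS issued by cert-manager."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": app,
            # cert-manager reads this annotation and issues a certificate
            # into the Secret named in spec.tls.
            "annotations": {"cert-manager.io/cluster-issuer": issuer},
        },
        "spec": {
            "ingressClassName": "nginx",
            "tls": [{"hosts": [host], "secretName": f"{app}-tls"}],
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }

print(json.dumps(ingress_manifest("shop", "shop.example.com", "shop-web", 8080), indent=2))
```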

4 – Role-Based Access Control (RBAC)

Not everyone should be a Kubernetes administrator. We should always apply the principle of least privilege when it comes to Kubernetes access. Role-based access control should be applied to the whole Kubernetes stack (Kubernetes API, deployment tools, dashboards, and so on). When we integrate Kubernetes with an IAM solution like Keycloak, Azure AD, or AWS Cognito, we can centrally manage authentication and authorization using OAuth2/OIDC for both platform tools and applications. Roles and groups can be defined to give users access to exactly the resources they need, based on their team or role.
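A least-privilege setup usually binds a narrowly scoped Role to a group that comes from the identity provider. The Python sketch below builds a read-only Role and a matching RoleBinding for one team namespace; the namespace, the group name (as it might arrive in an OIDC groups claim), and the resource list are assumptions:

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"
READ_VERBS = ["get", "list", "watch"]

def read_only_access(namespace, group):
    """Read-only Role for one namespace, bound to an identity-provider group."""
    role = {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": "read-only", "namespace": namespace},
        "rules": [
            # Secrets are intentionally not listed.
            {"apiGroups": [""], "resources": ["pods", "pods/log", "services", "configmaps"],
             "verbs": READ_VERBS},
            {"apiGroups": ["apps"], "resources": ["deployments"], "verbs": READ_VERBS},
        ],
    }
    binding = {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{group}-read-only", "namespace": namespace},
        "subjects": [{"kind": "Group", "name": group, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": "read-only", "apiGroup": RBAC_GROUP},
    }
    return [role, binding]

print(json.dumps(read_only_access("team-a", "team-a-developers"), indent=2))
```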

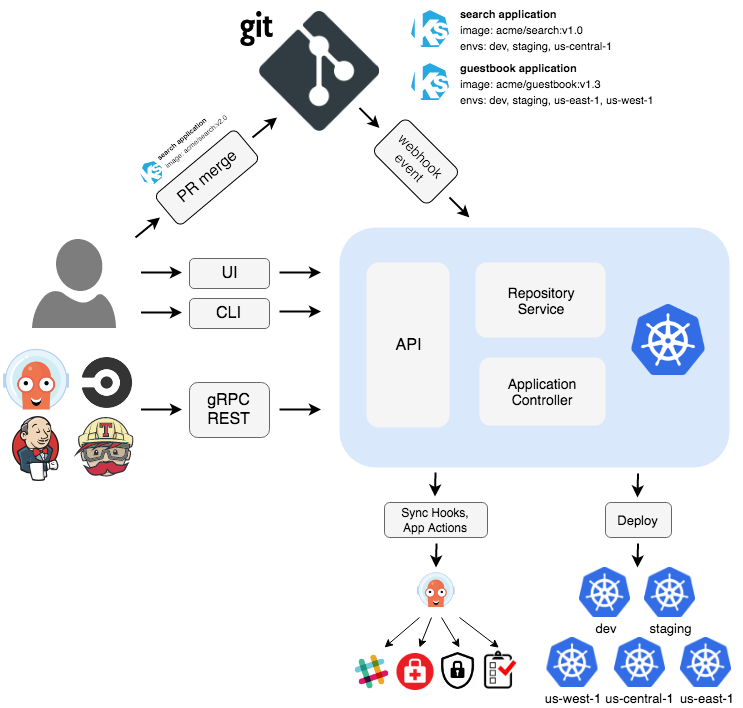

5 – GitOps Deployments

Everyone who works with Kubernetes uses kubectl one way or another. But manually deploying to Kubernetes with the 'kubectl apply' command is not a best practice, and certainly not in production. Kubernetes desired state configuration should live in Git, and we need a deployment platform that rolls it out to Kubernetes. ArgoCD and Flux are the two leading GitOps platforms for Kubernetes deployments. Both work very well for real-time declarative state management, making sure that Git is the single source of truth for the Kubernetes state. Even if a rogue developer tries to change something manually in production, the GitOps platform can automatically roll the change back to the desired state. With a GitOps bootstrapping technique, we can manage environments, teams, projects, roles, policies, namespaces, clusters, app groups, and applications, all from Git. GitOps makes sure that all changes to all Kubernetes environments are 100% traceable, easily automated, and manageable.
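At their core, ArgoCD and Flux compare the state declared in Git with the live state in the cluster. The Python sketch below shows only that comparison step, on plain dictionaries; it is a conceptual illustration, not how either tool is implemented, and the example object is hypothetical:

```python
def find_drift(desired, live, prefix=""):
    """List (path, desired, live) wherever the live object differs from Git."""
    drift = []
    for key, want in desired.items():
        path = f"{prefix}.{key}" if prefix else key
        have = live.get(key)
        if isinstance(want, dict) and isinstance(have, dict):
            drift.extend(find_drift(want, have, path))
        elif want != have:
            drift.append((path, want, have))
    return drift

desired = {"spec": {"replicas": 3, "image": "shop:1.4.2"}}
live = {"spec": {"replicas": 5, "image": "shop:1.4.2"}}  # someone ran kubectl scale
for path, want, have in find_drift(desired, live):
    print(f"{path}: live={have}, reverting to {want}")
# spec.replicas: live=5, reverting to 3
```

Only fields declared in Git are compared, so values the API server fills in by itself are not reported as drift.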

6 – Secret Management

Kubernetes Secret manifests are used to inject secrets into your containers, either as environment variables or as mounted files. Ideally, not everyone should be able to access all secrets, especially in production. Using role-based access control on secrets for team members and applications is a security best practice. Secrets can be injected into Kubernetes using CI/CD tooling or (worse) from a local development environment, but this can result in configuration drift that is neither traceable nor easily manageable. The best way to sync secrets is to use a central vault, such as Azure Key Vault, HashiCorp Vault, or AWS Secrets Manager, together with a central secrets operator like External Secrets Operator. This way, secret references can be stored in Git, pointing to an entry in an external secrets vault. For more security-focused companies, it is also an option to lock all developers out of Kubernetes secrets using RBAC. They will be able to reference secrets and use them in containers, but will never be able to access them directly.
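For illustration, the Python sketch below builds an ExternalSecret resource for External Secrets Operator: the manifest kept in Git holds only a reference (remoteRef) to an entry in the external vault, and the operator creates and refreshes the actual Kubernetes Secret. The store name, key, and property are hypothetical, and the apiVersion (external-secrets.io/v1beta1 here) depends on the operator release you run:

```python
import json

def external_secret(name, store, remote_key, prop, secret_key):
    """ExternalSecret that syncs one vault entry into a Kubernetes Secret."""
    return {
        "apiVersion": "external-secrets.io/v1beta1",  # may differ by release
        "kind": "ExternalSecret",
        "metadata": {"name": name},
        "spec": {
            "refreshInterval": "1h",
            "secretStoreRef": {"name": store, "kind": "ClusterSecretStore"},
            "target": {"name": name},  # Secret the operator creates and keeps in sync
            "data": [{
                "secretKey": secret_key,
                "remoteRef": {"key": remote_key, "property": prop},
            }],
        },
    }

print(json.dumps(external_secret("db-credentials", "vault-backend",
                                 "prod/shop/db", "password", "DB_PASSWORD"), indent=2))
```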

Conclusion

Spinning up a managed Kubernetes cluster is easy, but setting it up correctly takes time if you don't have the expertise. It is very important to have a good Infrastructure as Code solution, proper monitoring, RBAC, and deployment mechanisms that are secure, manageable, and traceable. The earlier, the better. Setting up your Kubernetes cluster according to best practices with standardized open source tooling will save you time, failures, and headaches, especially in the long run. Of course, these are only the most basic requirements for your Kubernetes stack, especially for enterprise-level companies. Other important considerations not covered here, such as a service mesh, security scanning and compliance, and end-to-end traceability, will be discussed in a future article.

If you don’t understand People, you don’t understand Business

Understand the Principles, the Loop, and the Keys

Before getting into the details of Enterprise Design Thinking, let's look at its core principles, explore the Loop, and take a look at the Keys.

The Principles guide you and your team

Look at problems and solutions as an ongoing conversation

A focus on user outcomes

Prioritize the needs of the people who will use your solution. Success is not measured by features or functions; it is measured by how well you meet users' needs.

Restless reinvention

Everything is a prototype! Everything, including existing products and solutions. When you treat everything as just another iteration, you can bring fresh ideas even to the oldest problems.

Diverse empowered teams

Diverse teams generate more ideas than teams in which everyone thinks alike, because different perspectives produce different ideas and increase the chances of a breakthrough. Diverse team members help a group broaden its knowledge and turn ideas into outcomes.

The Loop guides you and your team

Understand users' needs and deliver outcomes continuously

The Loop pushes the team to understand the present and envision the future in a continuous cycle of observing, reflecting, and making.

When teams start out, they come together to understand the present and envision the future. Each member is responsible for learning more about the users' world through observation, or for getting started right away by putting ideas into practice.

Design Thinking treats everything as a prototype. Everything is an unfinished product that will keep being worked on and reinvented. Teams can observe, reflect, and make repeatedly as they work to solve a problem.

The Keys align you and your team

The Keys help keep teams focused and aligned on the outcomes that matter to users

Enterprise Design Thinking introduces three core practices, the Keys, which help solve well-known problems that IBM often encounters in complex projects. They can be briefly described as:

- Hills, which align us as teams.

- Playbacks, which keep us aligned over time.

- Sponsor Users, who align us with their reality.

The Keys help keep teams focused on the outcomes that matter to users and on real-world needs.

Hills help teams get in sync

Hills are the elements of a development plan that let your project focus on big problems and user outcomes, not just on a list of feature requests. A hill says where to go, not how to get there, leaving the room needed to generate innovative ideas.

The purpose of a hill is to make sure the whole team is aligned on an important goal, seen from the end user's point of view. Because hills define the scope of a project, it is important that each hill statement be achievable in the available time. Every hill statement should contain three components: Who, What, and Wow.

Who is the primary user?

That is, the user to be served.

What will be delivered?

That is, the outcome users are enabled to achieve.

Wow is the difference that will make your solution worthwhile.

That is, a description of how this outcome will delight the customer.

Here are some examples that show how to fit the three components into a single statement:

A high school student [Who] can produce an eight-page history project [What] in less than 24 hours without help from their parents [Wow!].

I believe that this nation [Who] should commit itself to achieving the goal [What] […] of landing a man on the Moon and returning him safely to the Earth [Wow!]. (John F. Kennedy, US president, 1961)

Playbacks help teams stay in sync

Playbacks align our team, stakeholders, and clients around the value we will deliver to the user rather than around individual project items. Playbacks are presented to clients and teams in the form of user stories, keeping the attention on the value delivered to the user rather than on technical details. By using playbacks throughout the release cycle, everyone involved stays informed and aligned. Playbacks happen at many points along the way.

Here are some best practices to keep in mind:

- As a team develops hills, a Hills Playback helps ensure that everyone agrees on the project's intended outcome.

- Once the team believes it has a proposed solution for each hill, it plans the next milestone: Playback Zero. This session is when the team and stakeholders agree on what the team will actually commit to deliver.

- While developing the solution, the team prepares several Client Playbacks, in which it presents the plan, the three hills, and the user experience it intends to offer. In return, clients give the team feedback to keep improving the plan.

Sponsor users help you understand the "real" users

Sponsor Users are not necessarily managers or decision-makers. More often, they are users or potential users who help the whole team with their expertise. They work with the team toward an outcome that meets end users' needs.

Sponsor users do not replace research, but their perspective provides first-hand knowledge of users' specific needs. Their experiences help bridge the gap between the team's assumptions and the user's day-to-day reality.

Helm vs Operator

When it comes to running complex application workloads on Kubernetes, two technologies stand out: Helm and Kubernetes Operators. In this post we compare them and discuss how they complement each other in solving day-1 and day-2 operational problems for such workloads. We also present guidelines for creating Helm charts for Operators.

What is Helm?

The basic idea of Helm is to enable reuse of Kubernetes YAML artifacts through templating. Helm lets you define Kubernetes YAMLs with placeholder properties, whose actual values are defined in a separate values file. Helm combines the templated YAMLs with the values file, rendering the final YAMLs before deploying them into a cluster. The package consisting of the templated Kubernetes YAMLs and the values file is called a 'Helm chart'. The Helm project has gained considerable popularity because it solves one of the key problems that enterprises face: creating custom YAMLs to deploy the same application workload with different settings, or in different environments (dev/test/prod).
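A toy version of this merge-then-render step fits in a few lines of Python. The sketch below deep-merges environment-specific overrides into default values (overrides win, as with helm install -f) and substitutes {{ .Values.x.y }} placeholders. Real charts use Go templates with many more features; the template and values here are hypothetical:

```python
import re

def deep_merge(base, override):
    """Recursively merge dicts; values from override take precedence."""
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = value
    return merged

def render(template, values):
    """Replace {{ .Values.a.b }} placeholders with entries from values."""
    def lookup(match):
        node = values
        for part in match.group(1).split("."):
            node = node[part]  # raises KeyError if a value is missing
        return str(node)
    return re.sub(r"\{\{\s*\.Values\.([\w.]+)\s*\}\}", lookup, template)

TEMPLATE = ("replicas: {{ .Values.replicaCount }}\n"
            "image: {{ .Values.image.repository }}:{{ .Values.image.tag }}\n")
defaults = {"replicaCount": 1, "image": {"repository": "shop", "tag": "1.0"}}
prod = {"replicaCount": 3}  # e.g. the contents of a values-prod.yaml override

print(render(TEMPLATE, deep_merge(defaults, prod)))
```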

What is a Kubernetes Operator?

The basic idea of a Kubernetes Operator is to extend Kubernetes’ level-driven reconciliation loop and API set so that stateful application workloads can run natively on Kubernetes. A Kubernetes Operator consists of Kubernetes Custom Resource(s)/API(s) and Kubernetes Custom Controller(s). The Custom Resources represent an API that takes declarative inputs about the workload being abstracted, and the Custom Controller implements the corresponding actions on the workload. Operators are typically implemented in a general-purpose programming language (Golang, Python, Java, etc.). An Operator is packaged as a container image and deployed in a Kubernetes cluster using Kubernetes YAMLs. Once it is deployed, new Custom Resources (e.g. Mysql, Cassandra) become available to end users, just like built-in Resources (e.g. Pod, Service). This lets users orchestrate their application workflows more effectively by leveraging the additional Custom Resources.
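The reconcile idea can be illustrated with a toy Python sketch. Real Operators typically use a framework such as controller-runtime in Go and talk to the Kubernetes API server; here the `MySQLSpec` resource, the in-memory `ClusterState`, and the pod naming scheme are all hypothetical. The point is the level-driven pattern: each pass compares the desired state with the observed state and acts only on the difference, so running it again changes nothing.

```python
from dataclasses import dataclass, field


@dataclass
class MySQLSpec:
    """Hypothetical Custom Resource spec: the user's declarative input."""
    replicas: int
    version: str


@dataclass
class ClusterState:
    """In-memory stand-in for the live objects a controller would read from the API server."""
    pods: dict = field(default_factory=dict)  # pod name -> version it runs


def reconcile(name: str, spec: MySQLSpec, state: ClusterState) -> list:
    """One level-driven reconcile pass: compare desired and observed state, act on the difference."""
    actions = []
    desired = {f"{name}-{i}" for i in range(spec.replicas)}
    # Remove pods that are no longer wanted (e.g. after a scale-down).
    for pod in sorted(set(state.pods) - desired):
        del state.pods[pod]
        actions.append(f"delete {pod}")
    # Create missing pods and upgrade pods that run the wrong version.
    for pod in sorted(desired):
        if state.pods.get(pod) != spec.version:
            verb = "create" if pod not in state.pods else "upgrade"
            state.pods[pod] = spec.version
            actions.append(f"{verb} {pod}")
    return actions


if __name__ == "__main__":
    state = ClusterState()
    print(reconcile("db", MySQLSpec(replicas=2, version="8.0"), state))  # ['create db-0', 'create db-1']
    print(reconcile("db", MySQLSpec(replicas=2, version="8.0"), state))  # [] (already converged)
    print(reconcile("db", MySQLSpec(replicas=1, version="8.4"), state))  # ['delete db-1', 'upgrade db-0']
```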

Day-1 vs. Day-2 operations

Helm and Operators represent two different phases of managing complex application workloads on Kubernetes. Helm’s primary focus is the day-1 operation of deploying Kubernetes artifacts in a cluster. The ‘domain’ it understands is that of Kubernetes YAMLs composed of the available Kubernetes Resources/APIs. Operators, on the other hand, primarily address day-2 management tasks for stateful or complex workloads such as Postgres, Cassandra, Spark, Kafka, and SSL certificate management on Kubernetes.

Both mechanisms are complementary for deploying and managing such workloads on Kubernetes. To Helm, Operator deployment YAMLs are just one more type of artifact that can be templated and deployed with different settings and in different environments. To an Operator developer, Helm is a standard tool to package, distribute, and install Operator deployment YAMLs without tying them to any Kubernetes vendor or distribution. If you are an Operator developer, creating a Helm chart for your Operator’s deployment is tremendously useful for your users. Below are some guidelines to follow when creating such Operator Helm charts.

Guidelines for creating Operator Helm charts

1. Register CRDs in the Helm chart (not in the Operator code)

As mentioned earlier, an Operator consists of one or more Custom Resources and associated Controller(s). For the Custom Resources to be recognized in a cluster, they must first be registered using Kubernetes’ meta API, the ‘Custom Resource Definition (CRD)’. The CRD itself is a Kubernetes resource. Our first guideline is that Custom Resources should be registered through CRD YAML files in a Helm chart rather than in the Operator’s code (Golang, Python, etc.). The primary reason is that installing a CRD requires cluster-scope permissions, whereas an Operator Pod may not need cluster-scope permissions for its day-2 operations on an application workload. If you put CRD registration in your Operator’s code, you will have to deploy your Operator Pod with cluster-scope permissions, which may conflict with your security preferences. By defining CRD registration in the Helm chart, you only need to give the Operator Pod the permissions it needs to perform the actual day-2 operations on the underlying application workload.

2. Make sure CRDs are getting installed prior to the Operator deployment

The second guideline is to define a crd-install hook as part of the Operator Helm chart. What is this hook? ‘crd-install’ is a special annotation that you can add to the CRD manifests in your Helm chart. When installing a chart in a cluster, Helm installs any YAML manifests with this annotation before any other manifests in the chart. Adding this annotation ensures that the Custom Resources your Operator works with are registered in the cluster before the Operator is deployed, which prevents the errors that occur if the Operator starts running without its CRDs registered. Note that this hook is removed in Helm 3. Instead, CRDs go in a dedicated crds/ directory at the top level of the chart, and Helm installs them before the rest of the chart.
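As a rough illustration of the ordering this hook provides, the following Python sketch sorts a chart’s manifests so that anything annotated with the Helm 2 hook `"helm.sh/hook": crd-install` comes first. It is not Helm’s implementation: the manifests are simplified dicts, and the resource names are hypothetical.

```python
HOOK_ANNOTATION = "helm.sh/hook"


def is_crd_install_hook(manifest: dict) -> bool:
    """True if the manifest carries the Helm 2 'crd-install' hook annotation."""
    annotations = manifest.get("metadata", {}).get("annotations", {})
    # The annotation value may list several hooks separated by commas.
    hooks = {h.strip() for h in annotations.get(HOOK_ANNOTATION, "").split(",")}
    return "crd-install" in hooks


def install_order(manifests: list) -> list:
    """CRD hook manifests first; sorted() is stable, so the others keep their order."""
    return sorted(manifests, key=lambda m: 0 if is_crd_install_hook(m) else 1)


# Hypothetical manifests of an Operator chart.
MANIFESTS = [
    {"kind": "Deployment", "metadata": {"name": "mysql-operator"}},
    {"kind": "CustomResourceDefinition",
     "metadata": {"name": "mysqlclusters.example.com",
                  "annotations": {"helm.sh/hook": "crd-install"}}},
    {"kind": "ServiceAccount", "metadata": {"name": "mysql-operator"}},
]

if __name__ == "__main__":
    for manifest in install_order(MANIFESTS):
        print(manifest["kind"], manifest["metadata"]["name"])
```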

3. Define Custom Resource validation rules in CRD YAML

The next guideline is to add Custom Resource validation rules to the CRD YAML. A CRD can describe its Custom Resource with an OpenAPI v3 schema. From the Operator logic perspective, Custom Resource instances should also have valid values for their Spec properties, and you can express these validity rules in the CRD YAML. The Kubernetes API server enforces these rules when a Custom Resource is created or updated, before the object ever reaches your Operator. This helps prevent end users of the Custom Resource from making unintentional errors.
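The following Python sketch mimics, in a very reduced form, how such rules reject bad input. The keywords `type`, `minimum`, `maximum`, `enum`, `required`, and `properties` are real OpenAPI v3 schema keywords that can be used in a CRD’s `openAPIV3Schema`, but the `validate` function is a hypothetical toy, not the API server’s validator, and the MySQL-style spec fields are invented for illustration.

```python
# Hypothetical schema for the spec of a MySQL-style Custom Resource.
SCHEMA = {
    "type": "object",
    "required": ["replicas", "version"],
    "properties": {
        "replicas": {"type": "integer", "minimum": 1, "maximum": 9},
        "version": {"type": "string", "enum": ["5.7", "8.0"]},
    },
}

TYPES = {"integer": int, "string": str, "object": dict}


def validate(value, schema: dict, path: str = "spec") -> list:
    """Return a list of error messages; an empty list means the value is valid."""
    expected = TYPES[schema["type"]]
    # bool is a subclass of int in Python, so reject it explicitly for "integer".
    if not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
        return [f"{path}: expected {schema['type']}"]
    errors = []
    if "minimum" in schema and value < schema["minimum"]:
        errors.append(f"{path}: must be >= {schema['minimum']}")
    if "maximum" in schema and value > schema["maximum"]:
        errors.append(f"{path}: must be <= {schema['maximum']}")
    if "enum" in schema and value not in schema["enum"]:
        errors.append(f"{path}: must be one of {schema['enum']}")
    for key in schema.get("required", []):
        if key not in value:
            errors.append(f"{path}.{key}: required")
    for key, sub_schema in schema.get("properties", {}).items():
        if key in value:
            errors.extend(validate(value[key], sub_schema, f"{path}.{key}"))
    return errors


if __name__ == "__main__":
    print(validate({"replicas": 3, "version": "8.0"}, SCHEMA))  # []
    print(validate({"replicas": 0}, SCHEMA))  # ['spec.version: required', 'spec.replicas: must be >= 1']
```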

4. Use values.yaml or ConfigMaps for Operator configurables

Your Operator itself may need to be customized for different environments or even for different namespaces within a cluster. The customizations may range from small modifications, such as changing the base MySQL image used by a MySQL Operator, to larger ones, such as installing a different default set of plugins on different Moodle instances managed by a Moodle Operator. As mentioned earlier, Helm supports property markers and a values.yaml file for templating and customization. You can use these for small modifications that can typically be represented as single properties in the Operator deployment manifests. For larger modifications, consider passing the configuration data via ConfigMaps. The Operator needs to know the names of these ConfigMaps, and you can pass them using Helm’s property markers and the values.yaml file.

5. Add Platform-as-Code annotations to enable easy discovery and consumption of Operator’s Custom Resources

The last guideline is to add ‘Platform-as-Code’ annotations to your Operator’s CRD YAML. We recommend two annotations: ‘composition’ and ‘man’. The value of the ‘platform-as-code/composition’ annotation is a list of all the Kubernetes built-in resources that the Operator creates when a Custom Resource instance is created. The value of the ‘platform-as-code/man’ annotation is the name of a ConfigMap that packages ‘man page’-like usage information about the Custom Resource. Make sure to include this ConfigMap in your Operator’s Helm chart. These two annotations are part of our KubePlus API Add-on, which simplifies discovery, binding, and orchestration of Kubernetes Custom Resources to create platform workflows in typical multi-Operator environments.

Conclusion

Helm and Operators are complementary technologies. Helm is geared towards day-1 operations: templating and deploying Kubernetes YAMLs, in this case the Operator deployment. Operators are geared towards day-2 operations: managing application workloads on Kubernetes. You will need both when running stateful or complex workloads on Kubernetes.

At CloudARK, we have been helping platform engineering teams build their custom platform stacks assembling multiple Operators to run their enterprise workloads. Our novel Platform-as-Code technology is designed to simplify workflow management in multi-Operator environments by bringing in consistency across Operators and simplifying consumption of Custom Resources towards defining required platform workflows. For this we have developed comprehensive Operator curation guidelines and open source Platform-as-Code tooling.

Reach out to us to find out how you too can build your Kubernetes-native platforms with the Platform-as-Code approach.


Best 11 Kubernetes Tools for 2021

Introduction

In this article I will summarize my favorite Kubernetes tools, with special emphasis on the newest and lesser-known ones that I think will become very popular.

This is just my personal list based on my experience but, in order to avoid biases, I will try to also mention alternatives to each tool so you can compare and decide based on your needs. I will keep this article as short as I can and I will try to provide links so you can explore more on your own. My goal is to answer the question: “How can I do X in Kubernetes?” by describing tools for different software development tasks.

K3D

K3D is my favorite way to run Kubernetes (K8s) clusters on my laptop. It is extremely lightweight and very fast: it runs K3s, a lightweight Kubernetes distribution, inside Docker containers, so you only need Docker to run it, and its resource usage is very low. The main caveat is that K3s is a slimmed-down distribution that differs from upstream Kubernetes in some defaults, but this shouldn’t be an issue for local development; for test environments you can use other solutions. K3D is faster than Kind, but Kind runs upstream Kubernetes.


Krew

Krew is an essential tool for managing kubectl plugins and a must-have for any K8s user. I won’t go into the details of the more than 145 available plugins, but at least install kubens and kubectx.

Lens

Lens is an IDE for K8s for SREs, Ops and Developers. It works with any Kubernetes distribution: on-prem or in the cloud. It is fast, easy to use and provides real time observability. With Lens it is very easy to manage many clusters. This is a must have if you are a cluster operator.

Alternatives

- K9s is an excellent choice for those who prefer a lightweight terminal alternative. K9s continually watches Kubernetes for changes and offers commands to interact with the resources it observes.

Helm

Helm shouldn’t need an introduction: it is the most famous package manager for Kubernetes. And yes, you should use package managers in K8s, just as you use them in programming languages. Helm lets you package your application as Charts, which abstract complex applications into reusable, simple components that are easy to define, install, and update.

It also provides a powerful templating engine. Helm is mature, has lots of predefined charts and great support, and is easy to use.

Alternatives

- Kustomize is a newer, great alternative to Helm. Instead of a templating engine it uses an overlay engine: you define base manifests and apply overlays on top of them.
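The overlay idea can be sketched in Python as a recursive merge of an environment-specific patch onto a base manifest. This is a simplification: Kustomize’s strategic merge patches, for example, merge lists such as `containers` by key, while this hypothetical `apply_overlay` function simply replaces lists.

```python
import copy


def apply_overlay(base: dict, overlay: dict) -> dict:
    """Recursively merge an overlay onto a base manifest without mutating either input."""
    result = copy.deepcopy(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            # Nested mappings are merged field by field.
            result[key] = apply_overlay(result[key], value)
        else:
            # Scalars and lists from the overlay replace the base value.
            result[key] = copy.deepcopy(value)
    return result


if __name__ == "__main__":
    base = {"metadata": {"name": "web", "labels": {"app": "web"}}, "spec": {"replicas": 1}}
    prod_overlay = {"metadata": {"labels": {"env": "prod"}}, "spec": {"replicas": 5}}
    print(apply_overlay(base, prod_overlay))
```

Unlike templating, the base manifest stays valid, plain YAML; each environment only describes what it changes.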

ArgoCD

I believe GitOps is one of the best ideas of the last decade. In software development, we should use a single source of truth to track all the moving pieces required to build software, and Git is the perfect tool for that. The idea is to have a Git repository that contains the application code and also declarative descriptions of the infrastructure (IaC) representing the desired production environment state, plus an automated process that makes the environment match the state described in the repository.

GitOps: versioned CI/CD on top of declarative infrastructure. Stop scripting and start shipping.

Although Terraform and similar tools give you infrastructure as code (IaC), that is not enough to keep the desired state in Git in sync with production. We need a way to continuously monitor the environments and make sure there is no configuration drift. With Terraform you would have to write scripts that run terraform apply and check whether the status matches the Terraform state, but this is tedious and hard to maintain.

Kubernetes was built around control loops from the ground up: it constantly watches the state of the cluster to make sure it matches the desired state, for example that the number of running replicas matches the desired number of replicas. GitOps extends this idea to applications: you define your services as code, for example as Helm Charts, and use a tool that leverages K8s capabilities to monitor the state of your app and adjust the cluster accordingly. That is, if you update your code repo or your Helm chart, the production cluster is updated too. This is true continuous deployment. The core principle is that application deployment and lifecycle management should be automated, auditable, and easy to understand.

For me this idea is revolutionary and, if done properly, will let organizations focus more on features and less on writing automation scripts. The concept extends to other areas of software development: for example, you can store your documentation alongside your code to track the history of changes and keep it up to date, or track architectural decisions using ADRs (Architecture Decision Records).

In my opinion, the best GitOps tool for Kubernetes is ArgoCD. You can read more about it here. ArgoCD is part of the Argo ecosystem, which includes other great tools, some of which we will discuss later.

With ArgoCD you can have each environment in a code repository where you define all the configuration for that environment. Argo CD automates the deployment of the desired application state in the specified target environments.

Argo CD is implemented as a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the Git repo). Argo CD reports and visualizes the differences and can sync the live state back to the desired target state automatically or manually.
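A minimal Python sketch of that compare-and-sync cycle, assuming manifests are plain nested dicts. `Synced` and `OutOfSync` mirror the sync status names Argo CD displays, but `diff` and `reconcile_app` are hypothetical helpers; the real tool handles many more details, such as normalizing fields the cluster fills in and applying changes through the Kubernetes API.

```python
import copy


def diff(desired: dict, live: dict, prefix: str = "") -> list:
    """Return (path, live_value, desired_value) for every field where live state drifts from Git."""
    changes = []
    for key in sorted(set(desired) | set(live)):
        path = f"{prefix}{key}"
        want, have = desired.get(key), live.get(key)
        if isinstance(want, dict) and isinstance(have, dict):
            changes.extend(diff(want, have, path + "."))
        elif want != have:
            changes.append((path, have, want))
    return changes


def sync_status(desired: dict, live: dict) -> str:
    return "Synced" if not diff(desired, live) else "OutOfSync"


def reconcile_app(desired: dict, live: dict, auto_sync: bool):
    """Report drift; with auto-sync enabled, replace the live state with the desired state."""
    changes = diff(desired, live)
    if changes and auto_sync:
        return copy.deepcopy(desired), changes
    return live, changes  # manual mode: only report the differences


if __name__ == "__main__":
    desired = {"spec": {"replicas": 3, "image": "web:1.1"}}  # from Git
    live = {"spec": {"replicas": 5, "image": "web:1.1"}}     # someone scaled by hand
    print(sync_status(desired, live), diff(desired, live))
    live, _ = reconcile_app(desired, live, auto_sync=True)
    print(sync_status(desired, live))
```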

Alternatives

- Flux, which recently released a new version with many improvements, offers very similar functionality.

Argo Workflows and Argo Events

In Kubernetes, you may also need to run batch jobs or complex workflows as part of your data pipeline, asynchronous processes, or even CI/CD. On top of that, you may need to run event-driven microservices that react to events such as a file being uploaded or a message being sent to a queue. For all of this, we have Argo Workflows and Argo Events.

Although they are separate projects, they tend to be deployed together.

Argo Workflows is an orchestration engine similar to Apache Airflow but native to Kubernetes. It uses CRDs to define complex workflows as steps or DAGs in YAML, which feels more natural in K8s. It has a nice UI, retry mechanisms, cron-based scheduling, input and output tracking, and much more. You can use it to orchestrate data pipelines, batch jobs, and other workloads.
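To see how a DAG turns into an execution plan, here is a small Python sketch using the standard library’s `graphlib` (Python 3.9+). The pipeline tasks are hypothetical; the dependency map mirrors the idea behind the `dependencies` field of an Argo Workflows DAG task, and the grouping into waves shows which tasks could run in parallel. Argo’s own scheduler is not implemented this way.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical data-pipeline DAG: each task maps to the tasks it depends on.
PIPELINE = {
    "extract": set(),
    "validate": {"extract"},
    "transform": {"extract"},
    "train": {"validate", "transform"},
    "report": {"train"},
}


def execution_waves(dag: dict) -> list:
    """Group tasks into waves; tasks within a wave have no mutual dependencies."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises CycleError if the workflow contains a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # pretend every task in the wave finished successfully
    return waves


if __name__ == "__main__":
    for i, wave in enumerate(execution_waves(PIPELINE), start=1):
        print(f"wave {i}: {', '.join(wave)}")
    try:
        execution_waves({"a": {"b"}, "b": {"a"}})
    except CycleError as err:
        print("invalid workflow:", err)
```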

Sometimes you may want to integrate your pipelines with asynchronous services such as stream engines like Kafka, queues, webhooks, or deep storage services. For example, you may want to react to events like a file being uploaded to S3. For this, you use Argo Events.

Combined, these two tools provide an easy and powerful solution for all your pipeline needs, including CI/CD pipelines that run natively in Kubernetes.


Kaniko

We just saw how to run Kubernetes-native CI/CD pipelines using Argo Workflows. A common task is building Docker images, which is usually tedious in Kubernetes because the build process itself runs in a container and you need workarounds to use the host’s Docker engine.

The bottom line is that you shouldn’t use Docker to build your images: use Kaniko instead. Kaniko doesn’t depend on a Docker daemon and executes each command within a Dockerfile completely in userspace. This enables building container images in environments that can’t easily or securely run a Docker daemon, such as a standard Kubernetes cluster, and removes the usual issues with building images inside a K8s cluster.

Istio

Istio is the most famous service mesh on the market; it is open source and very popular. I won’t go into detail about what a service mesh is because it is a huge topic, but if you are building microservices (and you probably should), you will need a service mesh to manage communication, observability, error handling, security, and the other cross-cutting concerns that come with a microservice architecture. Instead of polluting the code of each microservice with duplicate logic, let the service mesh handle it for you.

In short, a service mesh is a dedicated infrastructure layer that you can add to your applications. It allows you to transparently add capabilities like observability, traffic management, and security, without adding them to your own code.

Istio is used to run microservices, and although you can run Istio and microservices anywhere, Kubernetes has proven over and over again to be the best platform for them. Istio can also extend your K8s cluster to other services such as VMs, enabling hybrid environments, which are extremely useful when migrating to Kubernetes.

Alternatives

- Linkerd is a lighter and probably faster service mesh. Linkerd is built for security from the ground up, with features ranging from on-by-default mTLS and a data plane written in Rust, a memory-safe language, to regular security audits.

- Consul is a service mesh built for any runtime and cloud provider, so it works great for hybrid deployments across K8s and cloud providers. It is a great choice if not all your workloads run on Kubernetes.

Argo Rollouts

We already mentioned that you can use Kubernetes to run your CI/CD pipelines with Argo Workflows or a similar tool, using Kaniko to build your images. The next logical step is continuous deployment. This is extremely challenging in real-world scenarios because of the high risk involved, which is why most companies only do continuous delivery: the automation is in place, but there are still manual approvals and verification steps, because the team cannot fully trust its automation.

So how do you build the trust needed to get rid of all the scripts and fully automate everything from source code to production? The answer is observability. You need to focus your resources on metrics and gather all the data needed to accurately represent the state of your application. The goal is to use a set of metrics to build that trust. If you have all the data in Prometheus, you can automate deployment, because you can drive the progressive rollout of your application from those metrics.

In short, you need more advanced deployment techniques than the one K8s offers out of the box, rolling updates. We need progressive delivery using canary deployments: progressively route traffic to the new version of an application, wait for metrics to be collected, analyze them, and match them against predefined rules. If everything is okay, increase the traffic; if there are any issues, roll back the deployment.
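The control flow of such a canary can be sketched in a few lines of Python. The step weights loosely follow the `setWeight` steps of an Argo Rollouts canary strategy, but `run_canary`, the error-rate threshold, and the metric function are hypothetical; in a real rollout the metric would come from a query against a provider such as Prometheus.

```python
from typing import Callable, List, Tuple

# Hypothetical canary plan: percentage of traffic sent to the new version at each step.
STEPS = [10, 25, 50, 100]


def run_canary(steps: List[int], error_rate: Callable[[int], float],
               max_error_rate: float = 0.01) -> Tuple[str, List[int]]:
    """Shift traffic step by step and roll back as soon as the analysis rule fails.

    error_rate(weight) stands in for a metrics query evaluated after
    `weight` percent of the traffic has been routed to the canary.
    """
    tried = []
    for weight in steps:
        tried.append(weight)  # route `weight`% of traffic to the new version
        if error_rate(weight) > max_error_rate:
            return "rolled back", tried  # send all traffic back to the stable version
    return "promoted", tried  # the new version now receives all traffic


if __name__ == "__main__":
    print(run_canary(STEPS, lambda weight: 0.001))
    # A version that only breaks under real load fails at the 50% step.
    print(run_canary(STEPS, lambda weight: 0.05 if weight >= 50 else 0.0))
```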

To do this in Kubernetes, you can use Argo Rollouts which offers Canary releases and much more.

Argo Rollouts is a Kubernetes controller and set of CRDs which provide advanced deployment capabilities such as blue-green, canary, canary analysis, experimentation, and progressive delivery features to Kubernetes.

Although service meshes like Istio provide canary releases, Argo Rollouts makes the process much easier and more developer-centric, since it was built specifically for this purpose. On top of that, Argo Rollouts can integrate with several service meshes.

Argo Rollouts Features:

- Blue-Green update strategy

- Canary update strategy

- Fine-grained, weighted traffic shifting

- Automated rollbacks and promotions or Manual judgement

- Customizable metric queries and analysis of business KPIs

- Ingress controller integration: NGINX, ALB

- Service Mesh integration: Istio, Linkerd, SMI

- Metric provider integration: Prometheus, Wavefront, Kayenta, Web, Kubernetes Jobs

Alternatives

- Istio as a service mesh for canary releases. Istio is much more than a progressive delivery tool; it is a full service mesh. However, Istio does not automate the deployment; Argo Rollouts can integrate with Istio to achieve this.

- Flagger is very similar to Argo Rollouts and is very well integrated with Flux, so if you are using Flux, consider Flagger.

- Spinnaker was one of the first continuous delivery tools to support Kubernetes. It has many features, but it is more complicated to use and set up.

Crossplane

Crossplane is my new favorite K8s tool. I’m very excited about this project because it brings a critical missing piece to Kubernetes: managing third-party services as if they were K8s resources. This means you can provision a cloud provider database such as AWS RDS or GCP Cloud SQL the same way you would provision a database in K8s, using K8s resources defined in YAML.

With Crossplane, there is no need to separate infrastructure and code using different tools and methodologies. You can define everything using K8s resources, so you don’t need to learn new tools such as Terraform and maintain them separately.

Crossplane is an open source Kubernetes add-on that enables platform teams to assemble infrastructure from multiple vendors, and expose higher level self-service APIs for application teams to consume, without having to write any code.

Crossplane extends your Kubernetes cluster with CRDs for any infrastructure or managed cloud service. Furthermore, it allows you to fully implement continuous deployment because, unlike tools such as Terraform, Crossplane uses existing K8s capabilities such as control loops to continuously watch your cluster, detect configuration drift, and act on it automatically. For example, if you define a managed database instance and someone changes it manually, Crossplane will automatically detect the change and set it back to the previous value. This enforces infrastructure-as-code and GitOps principles. Crossplane works great with Argo CD, which can watch the source code and make sure your code repo is the single source of truth, so that any change in the code is propagated to the cluster and to external cloud services. Without Crossplane you could only implement GitOps for your K8s services, not for your cloud services, unless you used a separate process; now you can do both, which is awesome.

Alternatives

- Terraform, the most famous IaC tool, but it is not native to K8s, requires new skills, and does not automatically watch for configuration drift.

- Pulumi, a Terraform alternative that lets you define infrastructure in general-purpose programming languages that developers can test and understand.

Knative

If you develop your applications in the cloud, you have probably used serverless technologies such as AWS Lambda, which follows an event-driven paradigm known as FaaS (Function as a Service).

I have written about Serverless before, so check my previous article to learn more. The problem with Serverless is that it tends to couple you tightly to a cloud provider, since each provider builds its own ecosystem for event-driven applications.

For Kubernetes, if you want to run functions as a service and use an event-driven architecture, your best choice is Knative. Knative is built to run functions on Kubernetes, creating an abstraction on top of a Pod.

Features:

- Focused API with higher level abstractions for common app use-cases.

- Stand up a scalable, secure, stateless service in seconds.

- Loosely coupled features let you use the pieces you need.

- Pluggable components let you bring your own logging and monitoring, networking, and service mesh.

- Knative is portable: run it anywhere Kubernetes runs, never worry about vendor lock-in.

- Idiomatic developer experience, supporting common patterns such as GitOps, DockerOps, ManualOps.

- Knative can be used with common tools and frameworks such as Django, Ruby on Rails, Spring, and many more.

Alternatives

- Argo Events provides an event-driven workflow engine for Kubernetes that can integrate with cloud services such as AWS Lambda. It is not FaaS, but it brings an event-driven architecture to Kubernetes.

Kyverno

Kubernetes provides great flexibility to empower agile, autonomous teams, but with great power comes great responsibility. There has to be a set of best practices and rules to ensure a consistent and cohesive way to deploy and manage workloads that comply with the company’s policies and security requirements.

There are several tools to enable this but none were native to Kubernetes… until now. Kyverno is a policy engine designed for Kubernetes, policies are managed as Kubernetes resources and no new language is required to write policies. Kyverno policies can validate, mutate, and generate Kubernetes resources.

A Kyverno policy is a collection of rules. Each rule consists of a match clause, an optional exclude clause, and one of a validate, mutate, or generate clause. A rule definition can contain only a single validate, mutate, or generate child node.

You can apply any kind of policy regarding best practices, networking, or security. For example, you can enforce that all your services have labels or that all containers run as non-root. You can check some policy examples here. Policies can be applied to the whole cluster or to a given namespace. You can also choose whether to just audit the policies or enforce them, blocking users from deploying non-compliant resources.
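In Kyverno these checks are written as YAML patterns, not code. Purely to illustrate the logic of a validate rule and the difference between audit and enforce modes, here is a hypothetical Python equivalent of the two example policies mentioned above (require an `app` label, require containers to set `runAsNonRoot: true`). `Enforce` and `Audit` mirror Kyverno’s `validationFailureAction` values; the functions and the label name are assumptions.

```python
def violations(pod: dict) -> list:
    """Return the policy violations for a Pod manifest (two hypothetical rules)."""
    found = []
    labels = pod.get("metadata", {}).get("labels", {})
    if "app" not in labels:
        found.append("metadata.labels.app is required")
    for container in pod.get("spec", {}).get("containers", []):
        # Simplification: only the container-level securityContext is checked here,
        # although Kubernetes also lets you set runAsNonRoot at the Pod level.
        context = container.get("securityContext", {})
        if context.get("runAsNonRoot") is not True:
            found.append(f"container {container.get('name')}: runAsNonRoot must be true")
    return found


def admit(pod: dict, action: str = "Enforce"):
    """Enforce blocks non-compliant resources; Audit admits them but reports the violations."""
    found = violations(pod)
    return (not found or action == "Audit"), found


if __name__ == "__main__":
    pod = {"metadata": {"name": "web"}, "spec": {"containers": [{"name": "web"}]}}
    print(admit(pod, "Enforce"))
    print(admit(pod, "Audit"))
```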

Alternatives

- Open Policy Agent is a well-known cloud native policy-based control engine. It uses its own declarative language (Rego) and works in many environments, not only on Kubernetes. It is more difficult to manage than Kyverno, but more powerful.

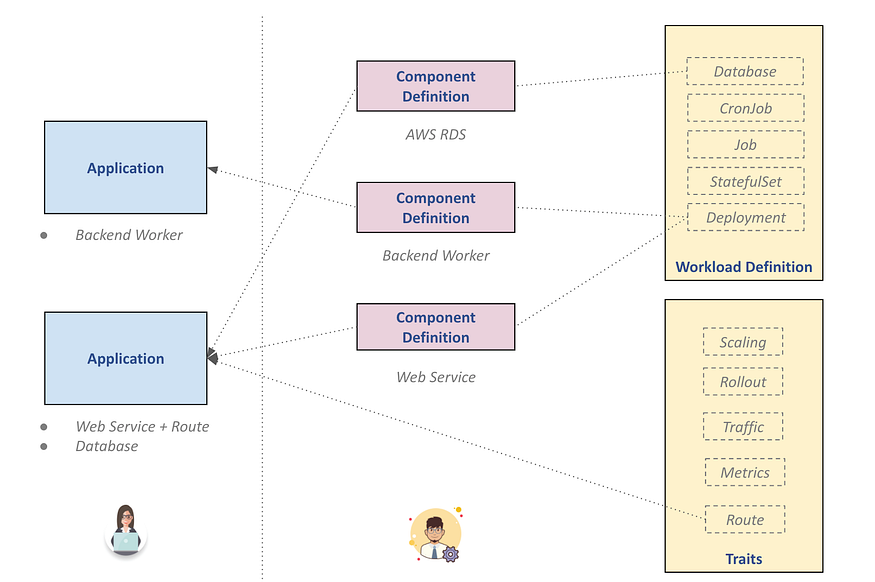

KubeVela

One problem with Kubernetes is that developers need to understand the platform and the cluster configuration very well. Many would argue that the level of abstraction in K8s is too low, which causes a lot of friction for developers who just want to focus on writing and shipping applications.

The Open Application Model (OAM) was created to overcome this problem. The idea is to create a higher level of abstraction around applications which is independent of the underlying runtime. You can read the spec here.

Focused on application rather than container or orchestrator, Open Application Model [OAM] brings modular, extensible, and portable design for modeling application deployment with higher level yet consistent API.

KubeVela is an implementation of the OAM model. KubeVela is runtime agnostic, natively extensible, and, most importantly, application-centric. In KubeVela, applications are first-class citizens implemented as Kubernetes resources. There is a distinction between cluster operators (the Platform Team) and developers (the Application Team). Cluster operators manage the cluster and the different environments by defining components (deployable or provisionable entities that compose your application, like Helm charts) and traits. Developers define applications by assembling components and traits.

KubeVela is a Cloud Native Computing Foundation sandbox project and although it is still in its infancy, it can change the way we use Kubernetes in the near future allowing developers to focus on applications without being Kubernetes experts. However, I do have some concerns regarding the applicability of the OAM in the real world since some services like system applications, ML or big data processes depend considerably on low level details which could be tricky to incorporate in the OAM model.

Alternatives

- Shipa follows a similar approach, enabling platform and developer teams to work together to easily deploy applications to Kubernetes.

Snyk

Security is a very important aspect of any development process, and it has always been an issue for Kubernetes, since companies that wanted to migrate to Kubernetes couldn’t easily apply their existing security principles.

Snyk tries to mitigate this by providing a security framework that can easily integrate with Kubernetes. It can detect vulnerabilities in container images, your code, open source projects and much more.

Velero

If you run your workload in Kubernetes and you use volumes to store data, you need to create and manage backups. Velero provides a simple backup/restore process, disaster recovery mechanisms and data migrations.

Unlike other tools which directly access the Kubernetes etcd database to perform backups and restores, Velero uses the Kubernetes API to capture the state of cluster resources and to restore them when necessary. Additionally, Velero enables you to backup and restore your application persistent data alongside the configurations.

Schema Hero

Another common process in software development is to manage schema evolution when using relational databases.

SchemaHero is an open-source database schema migration tool that converts a schema definition into migration scripts that can be applied in any environment. It uses the declarative nature of Kubernetes to manage database schema migrations: you just specify the desired state and SchemaHero manages the rest.
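To show what turning a desired state into migration scripts means in practice, here is a hypothetical Python sketch that diffs a table’s live columns against the desired ones and emits PostgreSQL-style DDL. SchemaHero’s real planner handles far more (indexes, constraints, several database engines); the table, column names, and types here are made up, and identifiers are not quoted.

```python
def plan_migration(table: str, live: dict, desired: dict) -> list:
    """Generate DDL that moves a table from its live columns to the desired columns."""
    statements = []
    for column, col_type in desired.items():
        if column not in live:
            statements.append(f"ALTER TABLE {table} ADD COLUMN {column} {col_type};")
        elif live[column] != col_type:
            statements.append(f"ALTER TABLE {table} ALTER COLUMN {column} TYPE {col_type};")
    for column in live:
        if column not in desired:
            statements.append(f"ALTER TABLE {table} DROP COLUMN {column};")
    return statements


if __name__ == "__main__":
    live = {"id": "integer", "name": "text", "legacy_flag": "boolean"}
    desired = {"id": "bigint", "name": "text", "email": "text"}
    for statement in plan_migration("users", live, desired):
        print(statement)
    # Once applied, the live schema matches and the plan is empty.
    print(plan_migration("users", desired, desired))
```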

Alternatives

- Liquibase is the most famous alternative. It is more difficult to use and not Kubernetes-native, but it has more features.

Bitnami Sealed Secrets

We have already covered GitOps tools such as ArgoCD. Our goal is to keep everything in Git and use the declarative nature of Kubernetes to keep the environments in sync. We just saw how we can (and should) keep our source of truth in Git and let automated processes handle configuration changes.

One thing that was usually hard to keep in Git was secrets, such as DB passwords or API keys, because you should never store secrets in plain text in your code repository. A common solution is to use an external vault such as AWS Secrets Manager or HashiCorp Vault to store the secrets, but this creates a lot of friction because you need a separate process to handle them. Ideally, we would like a way to store secrets safely in Git just like any other resource.

Sealed Secrets were created to overcome this issue, allowing you to store your sensitive data in Git using strong encryption. Bitnami Sealed Secrets integrate natively with Kubernetes: only the Sealed Secrets controller running in the cluster can decrypt them. The controller decrypts the data and creates native K8s Secrets in the cluster. This lets us store absolutely everything as code in our repo and perform continuous deployment safely without any external dependencies.

Sealed Secrets is composed of two parts:

- A cluster-side controller

- A client-side utility: kubeseal

The kubeseal utility uses asymmetric crypto to encrypt secrets that only the controller can decrypt. These encrypted secrets are encoded in a SealedSecret K8s resource that you can store in Git.


Capsule

Many companies use multi-tenancy to serve different customers. This is quite common in software development but difficult to implement in Kubernetes. Namespaces are a great way to partition the cluster logically into isolated slices, but this is not enough to isolate customers securely: we also need to enforce network policies, quotas, and more. You can create network policies and rules per namespace, but this is a tedious process that is difficult to scale. Also, tenants will not be able to use more than one namespace, which is a big limitation.

Hierarchical Namespaces were created to overcome some of these issues. The idea is to have a parent namespace per tenant with common network policies and quotas, and to allow the creation of child namespaces. This is a great improvement, but it does not offer native support for tenants in terms of security and governance. Furthermore, it hasn’t reached production status yet, although version 1.0 is expected to be released in the coming months.

A common approach today is to create a cluster per customer. This is secure and provides everything a tenant needs, but it is hard to manage and very expensive.

Capsule is a tool that provides native Kubernetes support for multiple tenants within a single cluster. With Capsule, you can have a single cluster for all your tenants. Capsule provides an “almost” native experience for the tenants (with some minor restrictions), who can create multiple namespaces and use the cluster as if it were entirely theirs, hiding the fact that the cluster is actually shared.

In a single cluster, the Capsule Controller aggregates multiple namespaces in a lightweight Kubernetes abstraction called Tenant, which is a grouping of Kubernetes Namespaces. Within each tenant, users are free to create their namespaces and share all the assigned resources while the Policy Engine keeps the different tenants isolated from each other.

The Network and Security Policies, Resource Quota, Limit Ranges, RBAC, and other policies defined at the tenant level are automatically inherited by all the namespaces in the tenant, similar to Hierarchical Namespaces. Users are then free to operate their tenants autonomously, without the intervention of the cluster administrator. Capsule is GitOps-ready since it is declarative and all the configuration can be stored in Git.

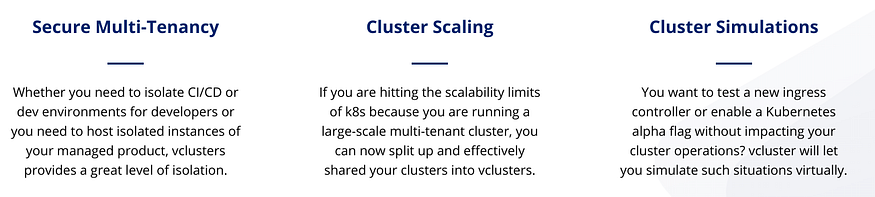

vCluster

vCluster goes one step further in terms of multi-tenancy: it offers virtual clusters inside a Kubernetes cluster. Each virtual cluster runs in a regular namespace and is fully isolated. Virtual clusters have their own API server and a separate data store, so every Kubernetes object you create in the vcluster only exists inside the vcluster. Also, you can use kube contexts with virtual clusters to use them like regular clusters.

As long as you can create a deployment inside a single namespace, you can create a virtual cluster and become the admin of that virtual cluster. Tenants can then create namespaces, install CRDs, configure permissions and much more.

vCluster uses k3s as its API server to make virtual clusters super lightweight and cost-efficient, and since k3s clusters are 100% compliant, virtual clusters are 100% compliant as well. vclusters are super lightweight (1 pod), consume very few resources and run on any Kubernetes cluster without requiring privileged access to the underlying cluster. Compared to Capsule, vCluster uses a bit more resources, but it offers more flexibility since multi-tenancy is just one of its use cases.

Other Tools

- kube-burner is used for stress testing. It provides metrics and alerts.

- kubewatch is used for monitoring but mainly focuses on push notifications based on Kubernetes events such as resource creation or deletion. It can integrate with many tools such as Slack.

- kube-fledged is a Kubernetes add-on for creating and managing a cache of container images directly on the worker nodes of a Kubernetes cluster. As a result, application pods start almost instantly, since the images need not be pulled from the registry.

Conclusion

In this article, we have reviewed my favorite Kubernetes tools. I focused on open source projects that can be incorporated into any Kubernetes distribution. I didn’t cover commercial solutions such as OpenShift or cloud provider add-ons since I wanted to keep it generic, but I do encourage you to explore what your cloud provider can offer if you run Kubernetes in the cloud or use a commercial tool.

My goal is to show you that you can do everything you do on-prem in Kubernetes. I also focused more on lesser-known tools that I think may have a lot of potential, such as Crossplane, Argo Rollouts or KubeVela. The tools I’m most excited about are vCluster, Crossplane and ArgoCD/Workflows.

Feel free to get in touch if you have any questions or need any advice.

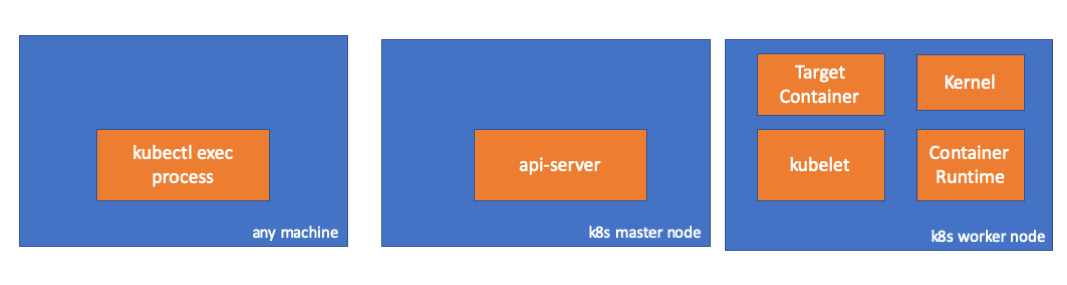

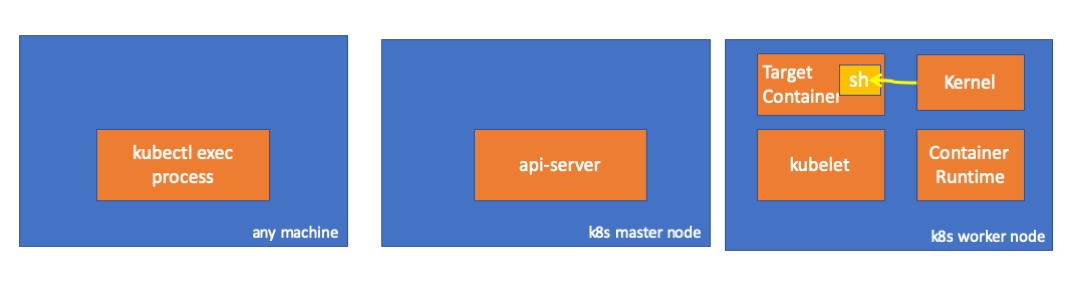

How does ‘kubectl exec’ work?

Last Friday, one of my colleagues approached me and asked how to exec a command in a pod with client-go. I didn’t know the answer, and I realized that I had never thought about the mechanism behind “kubectl exec”. I had some ideas about how it should work, but I wasn’t 100% sure. I noted the topic to check later, and I learned a lot from reading blogs, docs and source code. In this blog post, I am going to share my understanding and findings.

Setup

I cloned https://github.com/ecomm-integration-ballerina/kubernetes-cluster to create a k8s cluster on my MacBook. I fixed the IP addresses of the nodes in the kubelet configurations since the default configuration didn’t let me run kubectl exec. You can find the root cause here.

- Any machine = my MacBook

- IP of master node = 192.168.205.10

- IP of worker node = 192.168.205.11

- API server port = 6443

Components

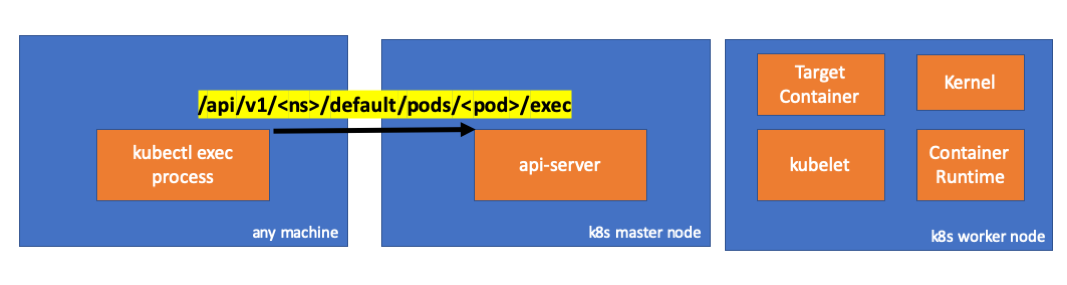

- kubectl exec process: When we run “kubectl exec …” on a machine, a process starts. You can run it on any machine that has access to the k8s API server.

- api server: Component on the master that exposes the Kubernetes API. It is the front-end for the Kubernetes control plane.

- kubelet: An agent that runs on each node in the cluster. It makes sure that containers are running in a pod.

- container runtime: The software that is responsible for running containers. Examples: docker, cri-o, containerd…

- kernel: the kernel of the OS on the worker node, which is responsible for managing processes.

- target container: A container which is a part of a pod and which is running on one of the worker nodes.

Findings

1. Activities in Client Side

- Create a pod in the default namespace:

```
// any machine
$ kubectl run exec-test-nginx --image=nginx
```

- Then run an exec command and `sleep 5000` to make observations:

```
// any machine
$ kubectl exec -it exec-test-nginx-6558988d5-fgxgg -- sh
# sleep 5000
```

- We can observe the kubectl process (pid=8507 in this case):

```
// any machine
$ ps -ef |grep kubectl
501 8507 8409 0 7:19PM ttys000 0:00.13 kubectl exec -it exec-test-nginx-6558988d5-fgxgg -- sh
```

- When we check the network activity of the process, we can see that it has some connections to the api-server (192.168.205.10, port 6443):

```
// any machine
$ netstat -atnv |grep 8507
tcp4 0 0 192.168.205.1.51673 192.168.205.10.6443 ESTABLISHED 131072 131768 8507 0 0x0102 0x00000020
tcp4 0 0 192.168.205.1.51672 192.168.205.10.6443 ESTABLISHED 131072 131768 8507 0 0x0102 0x00000028
```

- Let’s check the code. kubectl creates a POST request for the `exec` subresource and sends it as a REST request:

```go
req := restClient.Post().
	Resource("pods").
	Name(pod.Name).
	Namespace(pod.Namespace).
	SubResource("exec")
req.VersionedParams(&corev1.PodExecOptions{
	Container: containerName,
	Command:   p.Command,
	Stdin:     p.Stdin,
	Stdout:    p.Out != nil,
	Stderr:    p.ErrOut != nil,
	TTY:       t.Raw,
}, scheme.ParameterCodec)

return p.Executor.Execute("POST", req.URL(), p.Config, p.In, p.Out, p.ErrOut, t.Raw, sizeQueue)
```

Source: staging/src/k8s.io/kubectl/pkg/cmd/exec/exec.go

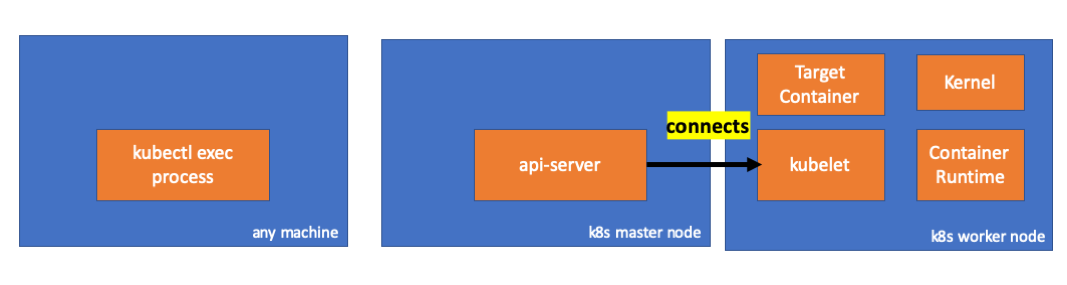
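The request that kubectl builds can be reproduced with the Go standard library alone. The sketch below assumes that the `PodExecOptions` fields end up as `command`, `container`, `stdin`, `stdout`, `stderr` and `tty` query parameters on the `pods/.../exec` URL, with one `command` parameter per argv element. `ExecOptions` and `buildExecURL` are hypothetical helpers, not client-go APIs. In kubectl itself, the URL comes from `req.URL()` in the excerpt above, and the encoding is handled by `scheme.ParameterCodec`.

```go
package main

import (
	"fmt"
	"net/url"
)

// ExecOptions mirrors a subset of corev1.PodExecOptions for illustration only.
type ExecOptions struct {
	Container                  string
	Command                    []string
	Stdin, Stdout, Stderr, TTY bool
}

// buildExecURL is a hypothetical helper that builds the pods/exec subresource
// URL. It encodes the options as query parameters and only adds boolean flags
// that are true (an assumption of this sketch).
func buildExecURL(apiServer, namespace, pod string, o ExecOptions) string {
	q := url.Values{}
	for _, arg := range o.Command {
		q.Add("command", arg) // one "command" parameter per argv element
	}
	if o.Container != "" {
		q.Set("container", o.Container)
	}
	for name, on := range map[string]bool{"stdin": o.Stdin, "stdout": o.Stdout, "stderr": o.Stderr, "tty": o.TTY} {
		if on {
			q.Set(name, "true")
		}
	}
	u := url.URL{
		Scheme:   "https",
		Host:     apiServer,
		Path:     fmt.Sprintf("/api/v1/namespaces/%s/pods/%s/exec", namespace, pod),
		RawQuery: q.Encode(), // Encode sorts keys, so the output is deterministic
	}
	return u.String()
}

func main() {
	fmt.Println(buildExecURL("192.168.205.10:6443", "default", "exec-test-nginx-6558988d5-fgxgg",
		ExecOptions{Container: "exec-test-nginx", Command: []string{"sh"}, Stdin: true, Stdout: true, TTY: true}))
	// https://192.168.205.10:6443/api/v1/namespaces/default/pods/exec-test-nginx-6558988d5-fgxgg/exec?command=sh&container=exec-test-nginx&stdin=true&stdout=true&tty=true
}
```

The values mirror the `kubectl exec -it ... -- sh` call from this walkthrough. stderr is left false on the assumption that, in a TTY session, stderr output travels over stdout.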

2. Activities in Master Node

- We can observe the request on the api-server side as well:

```
handler.go:143] kube-apiserver: POST "/api/v1/namespaces/default/pods/exec-test-nginx-6558988d5-fgxgg/exec" satisfied by gorestful with webservice /api/v1
upgradeaware.go:261] Connecting to backend proxy (intercepting redirects) https://192.168.205.11:10250/exec/default/exec-test-nginx-6558988d5-fgxgg/exec-test-nginx?command=sh&input=1&output=1&tty=1 Headers: map[Connection:[Upgrade] Content-Length:[0] Upgrade:[SPDY/3.1] User-Agent:[kubectl/v1.12.10 (darwin/amd64) kubernetes/e3c1340] X-Forwarded-For:[192.168.205.1] X-Stream-Protocol-Version:[v4.channel.k8s.io v3.channel.k8s.io v2.channel.k8s.io channel.k8s.io]]
```

Notice that the HTTP request includes a protocol upgrade request. SPDY allows separate stdin/stdout/stderr/spdy-error “streams” to be multiplexed over a single TCP connection.

- The api-server receives the request and binds it into a `PodExecOptions`:

```go
// PodExecOptions is the query options to a Pod's remote exec call
type PodExecOptions struct {
	metav1.TypeMeta

	// Stdin if true indicates that stdin is to be redirected for the exec call
	Stdin bool

	// Stdout if true indicates that stdout is to be redirected for the exec call
	Stdout bool

	// Stderr if true indicates that stderr is to be redirected for the exec call
	Stderr bool

	// TTY if true indicates that a tty will be allocated for the exec call
	TTY bool

	// Container in which to execute the command.
	Container string

	// Command is the remote command to execute; argv array; not executed within a shell.
	Command []string
}
```

Source: pkg/apis/core/types.go

- To take the necessary actions, the api-server needs to know which location it should contact:

```go
// ExecLocation returns the exec URL for a pod container. If opts.Container is blank
// and only one container is present in the pod, that container is used.
func ExecLocation(
	getter ResourceGetter,
	connInfo client.ConnectionInfoGetter,
	ctx context.Context,
	name string,
	opts *api.PodExecOptions,
) (*url.URL, http.RoundTripper, error) {
	return streamLocation(getter, connInfo, ctx, name, opts, opts.Container, "exec")
}
```

Source: pkg/registry/core/pod/strategy.go

Of course, the endpoint is derived from the node info:

```go
nodeName := types.NodeName(pod.Spec.NodeName)
if len(nodeName) == 0 {
	// If pod has not been assigned a host, return an empty location
	return nil, nil, errors.NewBadRequest(fmt.Sprintf("pod %s does not have a host assigned", name))
}
nodeInfo, err := connInfo.GetConnectionInfo(ctx, nodeName)
```

Source: pkg/registry/core/pod/strategy.go

GOTCHA! KUBELET HAS A PORT (node.Status.DaemonEndpoints.KubeletEndpoint.Port) TO WHICH THE API-SERVER CAN CONNECT.

```go
// GetConnectionInfo retrieves connection info from the status of a Node API object.
func (k *NodeConnectionInfoGetter) GetConnectionInfo(ctx context.Context, nodeName types.NodeName) (*ConnectionInfo, error) {
	node, err := k.nodes.Get(ctx, string(nodeName), metav1.GetOptions{})
	if err != nil {
		return nil, err
	}

	// Find a kubelet-reported address, using preferred address type
	host, err := nodeutil.GetPreferredNodeAddress(node, k.preferredAddressTypes)
	if err != nil {
		return nil, err
	}

	// Use the kubelet-reported port, if present
	port := int(node.Status.DaemonEndpoints.KubeletEndpoint.Port)
	if port <= 0 {
		port = k.defaultPort
	}

	return &ConnectionInfo{
		Scheme:    k.scheme,
		Hostname:  host,
		Port:      strconv.Itoa(port),
		Transport: k.transport,
	}, nil
}
```

Source: pkg/kubelet/client/kubelet_client.go

From the Kubernetes documentation (Master-Node Communication > Master to Cluster > apiserver to kubelet): “These connections terminate at the kubelet’s HTTPS endpoint. By default, the apiserver does not verify the kubelet’s serving certificate, which makes the connection subject to man-in-the-middle attacks, and unsafe to run over untrusted and/or public networks.”

- Now the api-server knows the endpoint, and it opens a connection:

```go
// Connect returns a handler for the pod exec proxy
func (r *ExecREST) Connect(ctx context.Context, name string, opts runtime.Object, responder rest.Responder) (http.Handler, error) {
	execOpts, ok := opts.(*api.PodExecOptions)
	if !ok {
		return nil, fmt.Errorf("invalid options object: %#v", opts)
	}
	location, transport, err := pod.ExecLocation(r.Store, r.KubeletConn, ctx, name, execOpts)
	if err != nil {
		return nil, err
	}
	return newThrottledUpgradeAwareProxyHandler(location, transport, false, true, true, responder), nil
}
```

Source: pkg/registry/core/pod/rest/subresources.go

- Let’s check what is going on on the master node.

First, find the IP of the worker node. It is 192.168.205.11 in this case.

```
// any machine
$ kubectl get nodes k8s-node-1 -o wide
NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME
k8s-node-1 Ready <none> 9h v1.15.3 192.168.205.11 <none> Ubuntu 16.04.6 LTS 4.4.0-159-generic docker://17.3.3
```

Then get the kubelet port. It is 10250 in this case.

```
// any machine
$ kubectl get nodes k8s-node-1 -o jsonpath='{.status.daemonEndpoints.kubeletEndpoint}'
map[Port:10250]
```

Then check the network. Is there a connection to the worker node (192.168.205.11)? THE CONNECTION IS THERE. When I kill the exec process, it disappears, so I know it was opened by the api-server because of my exec command.

```
// master node
$ netstat -atn |grep 192.168.205.11
tcp 0 0 192.168.205.10:37870 192.168.205.11:10250 ESTABLISHED
...
```

- Now the connection between kubectl and api-server is still open and there is another connection between api-server and kubelet.
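The api-server side of this lookup can be sketched in Go: choose a node address by preferred type, use the kubelet-reported port or fall back to a default, and proxy to `/exec/{namespace}/{pod}/{container}`. The types and helpers (`Node`, `NodeAddress`, `ExecFlags`, `preferredAddress`, `kubeletExecURL`) and the preference order are simplified assumptions, not the real `GetConnectionInfo`/`streamLocation` code. The `input`/`output`/`tty` flag names come from the `upgradeaware.go` log line above. `error` for stderr is assumed to follow the same scheme. With the values from this walkthrough, the sketch reproduces the backend URL seen in that log.

```go
package main

import (
	"errors"
	"fmt"
	"net"
	"net/url"
	"strconv"
)

// NodeAddress and Node are simplified stand-ins for the Node fields that
// GetConnectionInfo reads (hypothetical types, not the Kubernetes API).
type NodeAddress struct {
	Type    string // e.g. "Hostname", "InternalIP", "ExternalIP"
	Address string
}

type Node struct {
	Addresses   []NodeAddress
	KubeletPort int // node.Status.DaemonEndpoints.KubeletEndpoint.Port
}

// ExecFlags are the stream switches carried as input/output/error/tty.
type ExecFlags struct {
	Stdin, Stdout, Stderr, TTY bool
}

// preferredAddress returns the node address whose type comes first in preferred.
func preferredAddress(n Node, preferred []string) (string, error) {
	for _, want := range preferred {
		for _, a := range n.Addresses {
			if a.Type == want {
				return a.Address, nil
			}
		}
	}
	return "", errors.New("no address of a preferred type")
}

// kubeletExecURL picks host and port (falling back to defaultPort when the node
// reports none) and builds https://host:port/exec/{namespace}/{pod}/{container}?...
func kubeletExecURL(n Node, preferred []string, defaultPort int, namespace, pod, container string, command []string, f ExecFlags) (string, error) {
	host, err := preferredAddress(n, preferred)
	if err != nil {
		return "", err
	}
	port := n.KubeletPort
	if port <= 0 {
		port = defaultPort
	}
	q := url.Values{}
	for _, arg := range command {
		q.Add("command", arg)
	}
	for name, on := range map[string]bool{"input": f.Stdin, "output": f.Stdout, "error": f.Stderr, "tty": f.TTY} {
		if on {
			q.Set(name, "1")
		}
	}
	u := url.URL{
		Scheme:   "https",
		Host:     net.JoinHostPort(host, strconv.Itoa(port)),
		Path:     "/exec/" + namespace + "/" + pod + "/" + container,
		RawQuery: q.Encode(), // keys are sorted, so the output is deterministic
	}
	return u.String(), nil
}

func main() {
	node := Node{
		Addresses: []NodeAddress{
			{Type: "Hostname", Address: "k8s-node-1"},
			{Type: "InternalIP", Address: "192.168.205.11"},
		},
		KubeletPort: 10250,
	}
	u, err := kubeletExecURL(node, []string{"InternalIP", "Hostname"}, 10250,
		"default", "exec-test-nginx-6558988d5-fgxgg", "exec-test-nginx",
		[]string{"sh"}, ExecFlags{Stdin: true, Stdout: true, TTY: true})
	if err != nil {
		panic(err)
	}
	fmt.Println(u)
	// https://192.168.205.11:10250/exec/default/exec-test-nginx-6558988d5-fgxgg/exec-test-nginx?command=sh&input=1&output=1&tty=1
}
```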

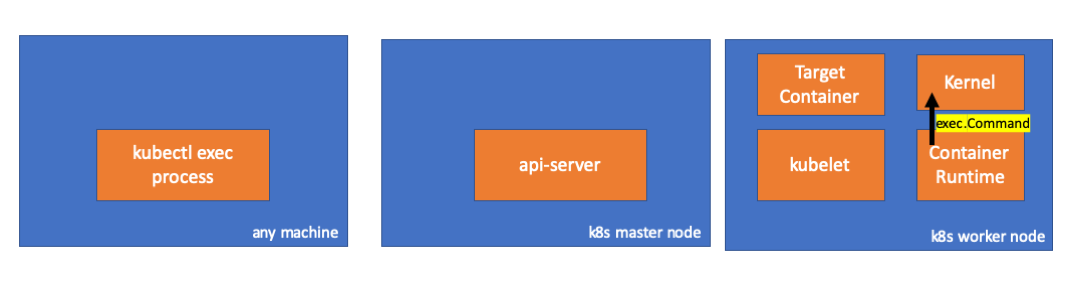

3. Activities in Worker Node

- Let’s continue by connecting to the worker node and checking what is going on there.

First, we can observe the connection here as well (the second line; 192.168.205.10 is the IP of the master node).

```
// worker node
$ netstat -atn |grep 10250
tcp6 0 0 :::10250 :::* LISTEN
tcp6 0 0 192.168.205.11:10250 192.168.205.10:37870 ESTABLISHED
```

What about our sleep command? HOORAYYYY!! OUR COMMAND IS THERE!!!!

```
// worker node
$ ps -afx
...
31463 ? Sl 0:00 \_ docker-containerd-shim 7d974065bbb3107074ce31c51f5ef40aea8dcd535ae11a7b8f2dd180b8ed583a /var/run/docker/libcontainerd/7d974065bbb3107074ce31c51
31478 pts/0 Ss 0:00 \_ sh
31485 pts/0 S+ 0:00 \_ sleep 5000
...
```

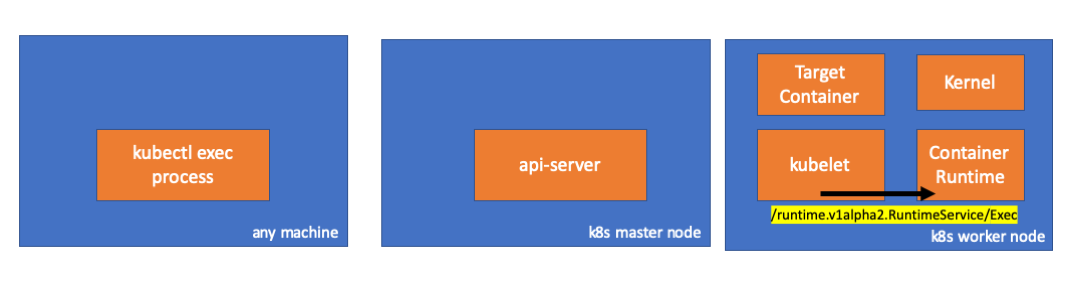

- Wait! How did kubelet do it?

- The kubelet has a daemon that serves an API over a port for api-server requests.

// Server is the library interface to serve the stream requests.

type Server interface {

http.Handler

// Get the serving URL for the requests.

// Requests must not be nil. Responses may be nil iff an error is returned.

GetExec(*runtimeapi.ExecRequest) (*runtimeapi.ExecResponse, error)

GetAttach(req *runtimeapi.AttachRequest) (*runtimeapi.AttachResponse, error)

GetPortForward(*runtimeapi.PortForwardRequest) (*runtimeapi.PortForwardResponse, error)

// Start the server.